How to Scrape Data from a Website into Excel: Quick Guide

- Nov 27, 2025


Pulling data from a website directly into Excel can feel like a superpower, especially if you're in marketing, finance, or research. It's the key to turning messy web pages into clean, usable data for analysis. This guide cuts through the technical jargon and lays out several practical ways to get this done, from simple, no-code options like Excel's built-in Power Query to more flexible solutions using Python. We'll walk through how you can automate grabbing live data for everything from competitor tracking to market research.

Why Scrape Data from a Website into Excel

Learning how to scrape data from a website into Excel is about moving from tedious manual work to smart automation. Forget endless copying and pasting of product prices, stock figures, or customer reviews. Setting up a scraper automates the entire process. Not only does this save a massive amount of time, but it also means your data is more accurate and always fresh.

For any modern business, this isn't just a nice-to-have; it's a competitive edge. Think about it: e-commerce stores can monitor competitor prices in near real-time, financial analysts can pull historical stock data for their models, and marketers can track social media trends for campaign feedback. It’s become a core part of making informed decisions. The web scraping market is booming for this very reason, projected to hit over $1.1 billion by 2025 as more companies rely on automated data feeds. You can read more about the web scraping industry benchmarks to see how businesses are putting these tools to work.

Choosing the Right Method for Your Needs

Not every scraping project is the same, so the right tool really depends on the complexity of the website and your own technical skills. We'll cover a few solid techniques in this guide, each with its own strengths. You’ll see everything from user-friendly features already inside Excel to more powerful coding-based approaches.

To help you decide, here's a quick comparison of the data scraping methods we'll cover, highlighting their difficulty, best use cases, and whether they require coding.

Comparing Methods to Scrape Data into Excel

| Method | Difficulty Level | Coding Required? | Best For |
|---|---|---|---|
| Excel Power Query | Easy | No | Importing clean HTML tables, simple data refreshes |
| Python Scripts | Moderate to Advanced | Yes | Complex, large-scale, and dynamic websites |
| Browser Extensions | Very Easy | No | Quick, one-off data grabs from simple pages |
| VBA Scripts | Moderate | Yes | Custom automation directly within Excel |
| ScrapeUnblocker API | Easy | Minimal | Bypassing blocks, CAPTCHAs, and handling JavaScript |

By getting a feel for these options, you can pick the one that best fits your needs and start turning raw web data into organized, actionable insights right inside your spreadsheets.

No-Code Scraping with Power Query

If the idea of writing code makes you want to close this tab, don't worry. The simplest way to scrape data from a website into Excel is already waiting for you inside Excel itself. It’s called Power Query, and you can find it under the "Get Data" menu. This is a fantastic no-code tool, especially for beginners who just need to grab structured data, like the kind you find in HTML tables.

Think of those pages with neatly organized tables: stock prices, product specs, or sports stats. Instead of the soul-crushing task of copying and pasting, Power Query creates a direct, refreshable link between that website and your spreadsheet. It’s a game-changer.

Your First Steps with Power Query

Getting started is surprisingly painless. You don’t need to know a thing about HTML or web requests. All you need is the URL of the page with the data you want.

Just head over to the Data tab in Excel and look for the "From Web" option. Excel will ask for a URL. Pop in the link, and Power Query will immediately start scanning the page, looking for any tables it can pull in.

What you'll see next is the Power Query Navigator, which shows a list of all the tables it found on the page. You can click on each one to see a preview.

This preview feature is brilliant because it takes all the guesswork out of the process. You can see exactly what you’re getting before you commit to importing it.

Transforming and Cleaning Your Data

Here’s where the real magic happens. Once you pick a table, Excel doesn't just dump the raw, messy data into your sheet. It opens the Power Query Editor, a visual tool where you can clean everything up before it even touches your workbook.

This is where you can roll up your sleeves and:

Remove useless columns: Get rid of anything you don't need with a quick right-click.

Fix data types: Tell Excel that a column of prices should be a number, not text, so you can actually do math with it.

Filter out junk: Only want to see products under $50? Easy. Just add a filter.

Split columns: Have a column with "City, State"? You can instantly break that into two separate columns.

Power Query is more than just an import tool; it’s a lightweight ETL (Extract, Transform, Load) engine inside Excel. It empowers you to build a repeatable process for cleaning web data, saving countless hours on manual data prep.

Let's say you're scraping real estate listings, and the price shows up as "$500,000". That dollar sign and comma make it useless for calculations. Power Query can automatically strip those out, leaving you with a clean number you can use in formulas and charts right away. This transformation step is what makes the scraped data truly useful.
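If you later move the same cleanup step into a script, a rough Python equivalent might look like the sketch below. It assumes US-style formatting (comma as the thousands separator, dot as the decimal point), and the function name `parse_price` is made up for this illustration.

```python
import re


def parse_price(raw: str) -> float:
    """Turn a display price such as "$500,000" into the number 500000.0."""
    # Drop everything except digits, the decimal point, and the minus sign.
    cleaned = re.sub(r"[^0-9.\-]", "", raw)
    return float(cleaned)


listings = ["$500,000", "$1,250,000.50", " $89,900 "]
prices = [parse_price(p) for p in listings]
print(prices)  # [500000.0, 1250000.5, 89900.0]
```

Once the values are real numbers, you can sum, average, or chart them just like any other numeric column.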

Setting Up Automatic Data Refreshes

One of the biggest wins with Power Query is automation. Once you've set up the connection and all your cleaning steps, you're not stuck with a one-time data pull. You can tell Excel to refresh the data automatically.

You can set it to update:

Every time you open the workbook.

On a schedule, like every 60 minutes, for as long as the workbook stays open.

This turns your spreadsheet from a static report into a live dashboard. If you're tracking something that changes often, like competitor pricing or inventory levels, this auto-refresh feature is invaluable. It keeps your analysis current without you having to lift a finger.

When Excel’s built-in tools just can't cut it, Python is the way to go. If you're dealing with complex websites, need to scrape data at a large scale, or want complete control over the process, nothing beats a custom Python script. Yes, it involves a bit of code, but the power and flexibility you get in return are immense.

With Python, you can build a scraper that targets the exact data you need with surgical precision. It allows you to automate the entire workflow, from fetching web pages to cleaning up the data and dropping it neatly into an Excel file.

In practice, your script is essentially a set of instructions telling the program exactly what to look for on a webpage and how to structure that information before saving it.

The Python Web Scraping Toolkit

To get started, you really only need a handful of essential libraries. Think of these as your specialized tools for grabbing, parsing, and organizing web data.

Requests: This is your first stop. The Requests library makes it incredibly simple to send an HTTP request to a website and pull down its raw HTML source code.

BeautifulSoup: Once you have the HTML, you need to make sense of it. BeautifulSoup is fantastic at this, turning a messy nest of tags into a clean, searchable structure you can easily navigate.

Pandas: The undisputed king of data manipulation in Python. With Pandas, you can take your scraped data, arrange it in a table-like format called a DataFrame, and then export it to an Excel (.xlsx) or CSV file with a single command.

These three work together beautifully. Requests fetches the page, BeautifulSoup extracts the data, and Pandas gets it ready for Excel. For a more detailed walkthrough, check out our practical guide to BeautifulSoup web scraping.

Example: Scraping a Simple, Static Website

Let’s say you want to pull product names and prices from a basic e-commerce site. The kind where all the content loads right away in the initial HTML, with no complex JavaScript involved. This is the perfect job for Requests and BeautifulSoup.

First, your script uses `requests.get()` to download the page content. You'd then pass that raw HTML over to BeautifulSoup. By using your browser's "Inspect" tool, you can quickly find the HTML tags and classes that contain the data you're after, such as the element that holds the product name and the one that holds the price.

From there, your Python script tells BeautifulSoup to find all elements that match those selectors. It loops through each product it finds, pulls out the text for the name and price, and adds them to a list.

The final step is pure simplicity. You use Pandas to load that list into a DataFrame and then save it. A single call to the DataFrame's `to_excel()` method is all it takes to create a clean Excel spreadsheet, ready for analysis.
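Here is a minimal sketch of that three-step flow. The selectors (`div.product`, `.product-name`, `.product-price`), the output filename, and the sample HTML are all assumptions made up for illustration; swap in whatever you find with the "Inspect" tool on your target page.

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup

# Hypothetical selectors: replace them with the ones you find via "Inspect".
PRODUCT_SELECTOR = "div.product"
NAME_SELECTOR = ".product-name"
PRICE_SELECTOR = ".product-price"


def parse_products(html: str) -> list[dict]:
    """Extract one {"name", "price"} record per product card in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.select(PRODUCT_SELECTOR):
        name = card.select_one(NAME_SELECTOR)
        price = card.select_one(PRICE_SELECTOR)
        if name is None or price is None:
            continue  # skip cards that don't match the expected layout
        rows.append({
            "name": name.get_text(strip=True),
            "price": price.get_text(strip=True),
        })
    return rows


def scrape_to_excel(url: str, out_path: str = "products.xlsx") -> None:
    """Download the page, parse it, and write the rows to an Excel file."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    df = pd.DataFrame(parse_products(response.text))
    # Writing .xlsx requires an Excel engine such as openpyxl to be installed.
    df.to_excel(out_path, index=False)


# Offline demo on a small, made-up HTML snippet.
sample_html = """
<div class="product"><h2 class="product-name">Desk Lamp</h2>
  <span class="product-price">$24.99</span></div>
<div class="product"><h2 class="product-name">Office Chair</h2>
  <span class="product-price">$149.00</span></div>
"""
print(parse_products(sample_html))
```

Keeping the parsing in its own function means you can test it against saved HTML without hitting the live site every time. When you're ready, calling `scrape_to_excel()` with a real URL runs the full fetch, parse, and export sequence.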

What About JavaScript? Tackling Dynamic Sites with Selenium

But what if the data isn't there when the page first loads? Modern websites often use JavaScript to fetch and display content after the initial HTML has been delivered. If you try to scrape these sites with just the Requests library, you'll end up with a blank slate because it can't run JavaScript.

That’s where a tool like Selenium becomes essential. Selenium works by automating a real web browser, like Chrome or Firefox. Your script can control the browser to perform actions just like a person would: click buttons, scroll down the page, or wait for dynamic content to appear.

Selenium sees the final, fully-rendered page inside a real browser. This gives it access to data that would otherwise be invisible to simpler scrapers, making it a must-have for scraping modern web apps.

For instance, on a page with an "infinite scroll" feature, you can program Selenium to keep scrolling down, triggering the JavaScript that loads more items. Once the new content is visible in the browser, Selenium grabs the updated page source, which you can then feed into BeautifulSoup just like before.
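The infinite-scroll idea can be sketched roughly like this. It assumes Selenium 4 with Chrome installed, and the pause length and round limit are arbitrary starting values rather than recommendations from any particular site.

```python
import time


def scroll_to_bottom(driver, pause: float = 2.0, max_rounds: int = 20) -> int:
    """Scroll until the page height stops growing; return the number of scrolls."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give the page's JavaScript time to load more items
        rounds += 1
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new was loaded, so we've reached the end
        last_height = new_height
    return rounds


def fetch_rendered_html(url: str) -> str:
    """Open the URL in Chrome, scroll through it, and return the final HTML."""
    # Imported here so scroll_to_bottom can be reused without Selenium installed.
    from selenium import webdriver

    driver = webdriver.Chrome()
    try:
        driver.get(url)
        scroll_to_bottom(driver)
        return driver.page_source
    finally:
        driver.quit()  # always close the browser, even if something fails
```

The string returned by `fetch_rendered_html()` can be handed straight to BeautifulSoup. The `max_rounds` cap is there so a page that never stops loading can't keep your script running forever.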

It’s no surprise that these more sophisticated scraping techniques are on the rise. The web scraping software market recently hit $1.01 billion and is projected to climb to $2.49 billion by 2032. Businesses are increasingly relying on this data for market analysis and competitive intelligence. In fact, industry reports show that 39.1% of developers now use proxies with their scrapers to access data reliably without getting blocked. This trend really underscores the move toward more robust solutions for getting valuable data off the web and into tools like Excel.

More Scraping Tools for Excel

While Power Query and Python are the heavyweights for data extraction, they aren't your only options. Sometimes you need a different kind of tool—maybe for a quick, one-off job or for a project that involves a website with tough anti-scraping defenses.

Let's look at a couple of fantastic alternatives that fill these gaps. These methods are perfect when you need to scrape data from a website into Excel but don't want the setup of Power Query or the learning curve of a full-blown Python script. They bring different strengths to the table and are valuable additions to any data gatherer's toolkit.

Browser Extensions for Quick Data Grabs

Picture this: you're on a website and just need to grab a list of names, prices, or addresses for a spreadsheet. You don't need a complex scraper; you just need the data right now. This is the perfect job for a browser extension.

These lightweight tools plug right into Chrome or Firefox, letting you visually point, click, and export data in seconds. Think of it as a smarter, more powerful copy-and-paste.

A few popular extensions that get the job done are:

Data Miner: A favorite for many, it comes with pre-built "recipes" for scraping popular sites like Amazon or LinkedIn automatically.

Scraper: A simpler tool. You just right-click an element on the page and tell it to find all the similar ones.

Web Scraper: Lets you build a visual "sitemap" to navigate through multiple pages and extract data along the way, all without writing code.

After you’ve selected the data, these extensions usually let you download it as a CSV or XLSX file, ready to be opened directly in Excel. It's the ultimate low-effort solution for simple, immediate data needs.

Dedicated Tools for Tough Scraping Challenges

Now for the other end of the spectrum. Some websites actively fight back against scrapers. They use JavaScript to load content dynamically, throw up CAPTCHAs to check if you're human, and block IP addresses that send too many requests. Trying to use basic tools on these sites is a recipe for frustration.

This is where dedicated scraping services like ScrapeUnblocker come into play. These platforms are engineered from the ground up to handle the hard parts of web scraping for you.

A dedicated scraping API is like a specialist you hire for a tough job. You give it a URL, and it uses a huge network of proxies and advanced browser rendering to visit the page like a real person, returning clean, structured data directly to you.

Instead of you having to build and manage a complex system of proxies, CAPTCHA solvers, and headless browsers, you just send a single API request. The service takes care of all the messy details behind the scenes.

How These Services Work with Excel

You might be surprised at how easily these professional tools fit into an Excel workflow. The process is pretty straightforward:

First, you send a request to the service's API, telling it which URL to scrape.

The service then navigates the site, running JavaScript and solving any anti-bot puzzles it encounters.

It returns the data in a structured format like JSON, or sometimes it gives you a direct link to download a CSV file.

Finally, you import that data into Excel. If you get JSON, you can use Power Query's "From Web" feature. If it's a CSV, it's just a simple one-click import.
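As a rough sketch of those four steps in a script, the example below uses only Python's standard library. The endpoint `https://api.example.com/scrape` and the `url` and `api_key` parameter names are placeholders, not any real provider's API, and the response is assumed to be a JSON list of flat objects; check your service's documentation for the actual details.

```python
import csv
import io
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint and parameter names; check your provider's documentation.
API_ENDPOINT = "https://api.example.com/scrape"


def build_request_url(target_url: str, api_key: str) -> str:
    """Build the API call that asks the service to scrape target_url."""
    query = urllib.parse.urlencode({"url": target_url, "api_key": api_key})
    return f"{API_ENDPOINT}?{query}"


def fetch_records(target_url: str, api_key: str) -> list[dict]:
    """Call the service and decode its JSON response (assumed: a list of objects)."""
    request_url = build_request_url(target_url, api_key)
    with urllib.request.urlopen(request_url, timeout=60) as resp:
        return json.load(resp)


def records_to_csv(records: list[dict]) -> str:
    """Convert a list of flat JSON objects into CSV text that Excel can open."""
    if not records:
        return ""
    # Collect every key in first-seen order so no column is dropped.
    fieldnames = list(dict.fromkeys(key for rec in records for key in rec))
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Offline demo with made-up records standing in for an API response.
sample = json.loads(
    '[{"name": "Desk Lamp", "price": 24.99}, {"name": "Office Chair", "price": 149.0}]'
)
print(records_to_csv(sample))
```

Saving the returned text to a `.csv` file gives you something Excel opens directly, which is handy when you'd rather not set up a Power Query connection for a one-off pull.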

This approach gives you the raw power of an industrial-grade scraper with the simplicity of opening a file in Excel. It’s the best of both worlds, especially when you're up against dynamic websites that make other methods fall flat.

While a tool like Selenium can also handle JavaScript, mastering it for reliable, large-scale scraping is a major project. For a closer look at what it takes, check out our guide on Selenium web scraping in Python. Using a dedicated service offloads all that complexity, so you can focus on the data, not the fight to get it.

Best Practices for Ethical Web Scraping

Pulling data successfully is one thing, but doing it responsibly is what separates the pros from the amateurs. Ethical scraping is all about getting the information you need without hammering a website's server, getting your IP banned, or wandering into a legal minefield. It's like being a good guest at someone's house—you don't cause a scene.

This isn't just about being a good digital citizen, either. It directly impacts the reliability of your scrapers. When you play by the rules, your scripts are far less likely to break or get blacklisted, ensuring a steady, consistent flow of data.

Check the Rules of Engagement First

Before you even think about writing code or firing up a tool, your very first stop should be the website's robots.txt file. You can almost always find it by just tacking `/robots.txt` onto the end of the site's main domain. This little text file is the site owner's instructions for bots, clearly stating which pages are off-limits.
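Python's standard library can read these rules for you. The sketch below parses a made-up robots.txt and asks whether a given path may be fetched; the sample rules, the `example.com` URLs, and the bot name are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "DataProjectBot/1.0"  # illustrative bot name


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse the text of a robots.txt file into a rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def load_rules_from_site(base_url: str) -> RobotFileParser:
    """Download and parse the robots.txt that lives at the site's root."""
    parser = RobotFileParser()
    parser.set_url(base_url.rstrip("/") + "/robots.txt")
    parser.read()  # performs the HTTP request
    return parser


# Offline demo with a made-up rule set.
sample_robots = """\
User-agent: *
Disallow: /private/
Allow: /
"""
rules = load_rules(sample_robots)
print(rules.can_fetch(USER_AGENT, "https://example.com/products"))        # True
print(rules.can_fetch(USER_AGENT, "https://example.com/private/report"))  # False
```

Running a `can_fetch()` check before each request is a cheap way to make sure your scraper never wanders into a section the site owner has marked off-limits.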

Next, find the website’s Terms of Service (ToS). It might be dry reading, but it's the legal rulebook for using the site and usually contains specific clauses about automated data collection. Following these guidelines is the most important step you can take.

Scrape Politely, Not Aggressively

Once you've cleared the rules, it's time to think about how you scrape. An aggressive scraper can easily slow a site down for human visitors or even crash it, which is the fastest way to get your IP address permanently blocked.

Here are a few simple but crucial practices I always follow:

Pace Yourself. Don't bombard the server. Implement a delay between your requests, a practice known as rate limiting. Firing off hundreds of requests a second screams "malicious bot." A simple pause of a few seconds is often all it takes to go unnoticed.

Be Honest About Who You Are. Use a clear User-Agent string in your request headers. Instead of masking your scraper as a common browser, identify it. Something like "DataProjectBot/1.0" is transparent and shows you're not trying to be sneaky.

Scrape at Quiet Times. Whenever possible, schedule your scraper to run during the site’s off-peak hours, like late at night. This lightens the load on their servers when it matters most.
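The first two habits can be sketched in a few lines using only the standard library. The three-second delay is an assumed starting point rather than a universal rule, and the helper names are invented for this example.

```python
import time
import urllib.request

USER_AGENT = "DataProjectBot/1.0"  # a transparent, honest identifier
DELAY_SECONDS = 3.0                # assumed pause; tune it to the site


def fetch(url: str) -> str:
    """Download one page while identifying the scraper in the User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_politely(urls, fetch_page=fetch, delay=DELAY_SECONDS):
    """Fetch URLs one at a time, sleeping between consecutive requests."""
    pages = {}
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay)  # rate limiting: never fire requests back to back
        pages[url] = fetch_page(url)
    return pages
```

Because `fetch_page` is a parameter, you can pass in a stub while testing and only hit the real site once the rest of your pipeline works. For the third habit, a scheduler such as cron or Windows Task Scheduler can launch the script during off-peak hours.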

Scraping politely builds a sustainable data pipeline. An aggressive scraper might get you data quickly once, but a considerate one can gather data reliably for months or even years without getting shut down.

The web scraping market is exploding for a reason. It's projected to hit $501.9 million by 2025 and is on track to reach a staggering $2.03 billion by 2035. This growth is fueled by businesses that depend on a reliable stream of data, which can only be achieved through sustainable scraping. You can explore more on the growing web scraping market to see the trends. For a more detailed look at these techniques, check out our guide on 10 web scraping best practices for developers in 2025.

Choosing the Right Scraping Tool for the Job

So, where do you go from here? We’ve covered a lot of ground, from Excel’s own Power Query to the raw power of Python scripting. You now have a full arsenal of methods to scrape data from a website into Excel.

We've seen that Power Query is a brilliant starting point for anyone who lives in spreadsheets and doesn't want to code. On the other end of the spectrum, Python offers you complete control for massive, complex projects that need to be customized from the ground up.

And let's not forget the middle ground. Browser extensions and specialized tools each have their place, offering either a quick fix for a simple task or some serious muscle for those notoriously difficult websites. The real key is knowing which tool to pull out of the bag for the specific job you have in front of you.

Matching the Method to Your Mission

Before you write a single line of code or click any buttons, think about the project's complexity. Is the data you need sitting nicely in a clean HTML table? Power Query will probably get you there in minutes. It’s simple, effective, and built right into Excel.

But what if you need to pull product details from thousands of pages on a modern e-commerce site loaded with JavaScript? That’s a completely different ballgame. For that, you’ll need to roll up your sleeves with a Python script or use a powerful API like ScrapeUnblocker that handles all the anti-bot headaches for you.

Your own comfort with technology matters just as much. There's no shame in using a simple browser extension if it gets you the data you need in 30 seconds. The goal here is getting reliable data efficiently, not winning a coding competition.

The best web scrapers don't just master one tool; they build a versatile skillset. When you understand what each method does best, you can always choose the most direct path from raw web data to valuable insights in your spreadsheet.

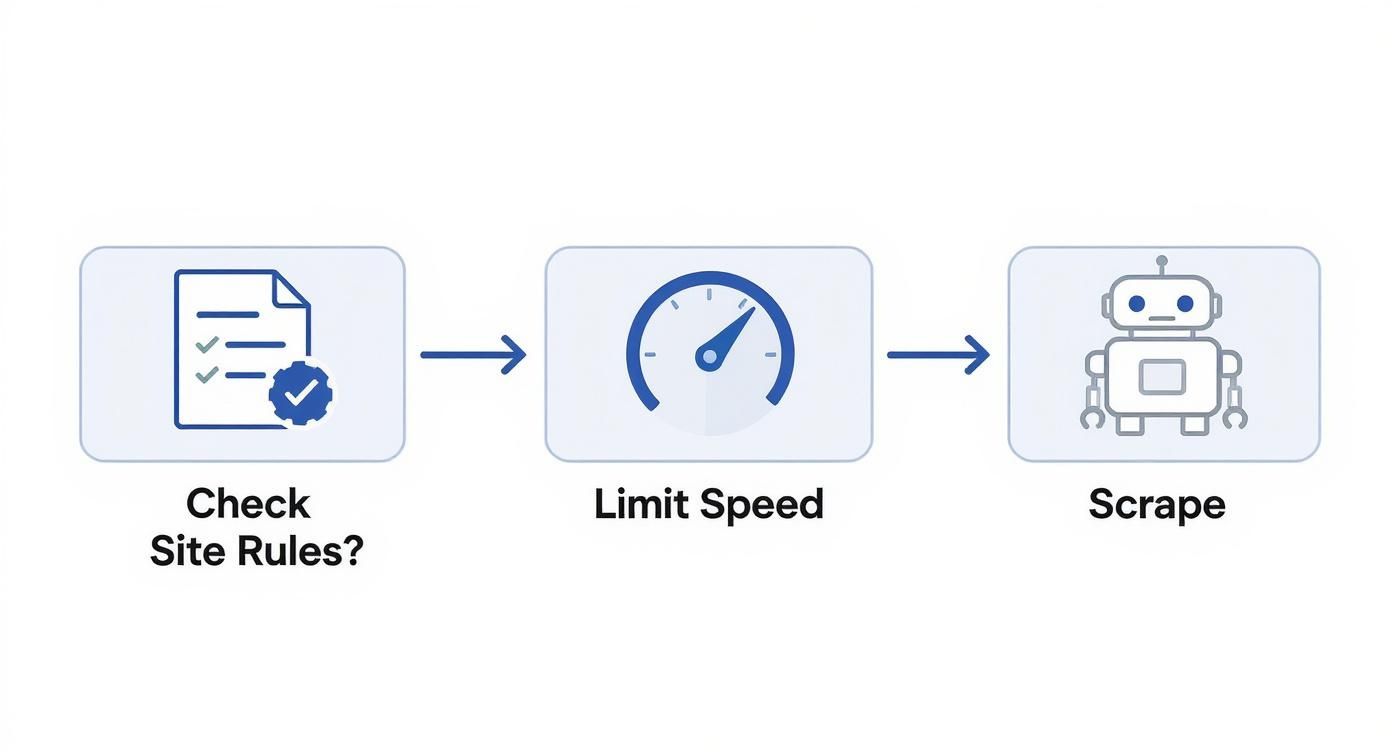

This flowchart lays out a simple, ethical mindset for approaching any new scraping project.

It really boils down to two fundamental first steps: always check the website’s rules (robots.txt) and be a good internet citizen by limiting how fast you send requests.

By starting with a clear objective and sticking to these responsible practices, you can confidently turn the web's endless information into clean, structured data right inside Excel. That's how you start making smarter, data-backed decisions.

Frequently Asked Questions

When you're getting into web scraping, a few key questions always come up. Let's tackle the big ones—legality, logins, and what to do when your scraper inevitably breaks.

Is It Legal to Scrape Data from Any Website?

This is the million-dollar question, and the answer isn't a simple yes or no. For the most part, scraping data that's publicly available is okay, but you can't just go wild. The first thing I always do is check a site's robots.txt file and its terms of service. These documents are the rulebook.

As a general guideline, stay far away from scraping:

Personal data protected by privacy laws like GDPR or CCPA.

Copyrighted material like articles, photos, or unique data sets.

Anything behind a login screen, since that almost always violates the site's terms.

If you stick to public, non-sensitive information and play by the site's rules, you'll stay on the right side of the ethical and legal lines.

How Can I Scrape Data from a Website That Requires a Login?

Pulling data from behind a login is a whole different ballgame: it's technically tricky and ethically murky. Your average tool, like Excel's Power Query, usually can't do it because it can't handle the form-based login sessions most websites use.

This is where you need to bring out the bigger guns. A Python script using a library like Selenium can automate a real browser to log in just like a person would. Specialized scraping services can also handle this. But—and this is a big but—you absolutely must have the right to access and pull that data. Unauthorized scraping behind a login can get your account banned or worse.

Scraping behind a login should be approached with extreme caution. Always confirm you are not violating the platform's user agreement, as accessing data in this manner often crosses a line from public data gathering to unauthorized access.

What Should I Do If a Website Structure Changes?

It's not a matter of if a website changes its layout, but when. And when it does, your scraper will break. It's just a fact of life in this field.

If you built your scraper with Power Query, the fix is usually pretty simple. You can just go back into your query, edit the steps, and point it at the new HTML table.

With a Python script, you'll have to put on your detective hat. Pop open the webpage, right-click and "Inspect" the new HTML, and figure out what changed. Then, you just update your script's selectors (like the CSS classes or element IDs) to match the new design. A pro tip is to build good error handling and logging into your scripts from day one; it makes finding and fixing these breaks so much faster.
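As one way to follow that pro tip, the sketch below wraps each lookup so that a missing element produces a log warning instead of a crash. The selectors, field names, and sample HTML are made up for illustration.

```python
import logging

from bs4 import BeautifulSoup

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("scraper")


def extract_text(soup, selector: str, field: str):
    """Return the text for selector, or None (with a warning) if it is missing."""
    node = soup.select_one(selector)
    if node is None:
        logger.warning(
            "Selector %r for field %r matched nothing; "
            "the page layout may have changed.",
            selector,
            field,
        )
        return None
    return node.get_text(strip=True)


# Offline demo: the name is present, the price element is not.
html = '<div><h1 class="title">Desk Lamp</h1></div>'
soup = BeautifulSoup(html, "html.parser")
print(extract_text(soup, "h1.title", "name"))
print(extract_text(soup, "span.price", "price"))
```

When a redesign lands, the log tells you exactly which selector stopped matching, so you know where to start looking in the "Inspect" panel.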
