How to Scrape Google Search Results: A Practical Guide

- John Mclaren

- Nov 20, 2025

- 16 min read

Scraping Google search results is all about using automated scripts, or "bots," to pull data directly from the Search Engine Results Pages (SERPs) on a large scale. At its simplest, you're sending a request to Google, just like a browser does, then sifting through the HTML it sends back to grab the good stuff—titles, links, descriptions, and more.

It sounds straightforward, but this process unlocks some serious market intelligence. The trick is learning how to do it without getting blocked, which means getting smart about things like proxies and user-agents.

Why Bother Scraping Google Search?

Before we jump into the code and the how-to, let's talk about the why. What makes this data so valuable? Think of Google as a live, constantly updating reflection of human curiosity, market trends, and your competitors' every move. Pulling data from it isn't just a technical challenge; it’s a way to get actionable insights that can give you a real advantage.

The scale here is almost hard to comprehend. Google handles around 9.5 million search queries every single minute. That adds up to roughly 5 trillion searches a year, making SERPs one of the most dynamic and rich sources of real-time data on the planet. For a deeper dive into the numbers, check out the analysis on RoundProxies.com.

How Businesses Use This Data in the Real World

For most businesses, SERP data is a goldmine. Here are a few common scenarios I've seen play out:

Competitive Analysis: An e-commerce brand can keep a constant eye on competitor prices by scraping product listings and price snippets. This lets them react instantly, adjusting their own pricing to stay in the game.

Smarter SEO Strategy: A marketing agency can uncover valuable keyword gaps by analyzing what's already ranking. Seeing the top results and what people are asking in the "People Also Ask" boxes tells them exactly what kind of content they need to create to meet user demand.

Spotting Market Trends: Imagine a research firm tracking search volumes over time. A sudden jump in searches for a new type of product can be the earliest signal of a new consumer trend, long before it ever shows up in a formal market report.

The real magic of scraping isn't just about grabbing data. It’s about turning that raw SERP information into a clear roadmap for making smarter business decisions. You stop guessing what your audience wants and start knowing what they're actively looking for.

Facing the Inevitable Hurdles

Of course, it's not all smooth sailing. Google works hard to prevent automated scraping. You'll run into CAPTCHAs, IP bans, rate limiting, and constantly changing HTML layouts designed to break your scripts.

Getting good at scraping Google means anticipating these roadblocks from the start. This guide is designed to give you the strategies you need to build scrapers that are not only reliable but also ethical, allowing you to navigate these challenges effectively.

Decoding the Modern Google SERP

Before you can even think about scraping Google, you have to truly understand what you're looking at. The days of ten blue links are long gone. Today’s Search Engine Results Page (SERP) is a dynamic, modular beast, and treating it like a simple webpage is the first mistake most people make.

Think of it less as a page and more as a structured database that's just disguised as a user interface. Your job is to become a sort of digital archaeologist, learning to spot and extract the valuable artifacts from this complex layout.

The real challenge—and where the most valuable data lies—is in the rich SERP features Google constantly experiments with. A scraper built just to pull organic links will miss a massive amount of context and insight.

Identifying Key SERP Components

The first real step is to map the territory. A single SERP is a collection of distinct, independent components, and you need to know how to target each one.

Here are the most common elements you'll be dealing with on any given search:

Organic Results: The classic bread and butter. Each one contains a title, a display URL, the actual link, and a descriptive snippet.

Featured Snippets: That coveted "position zero" block right at the top. It’s Google’s attempt to answer a question directly, and scraping it gives you what the algorithm considers the most authoritative, concise answer.

"People Also Ask" (PAA) Boxes: This is an absolute goldmine for keyword and content research. These expandable boxes show you the exact follow-up questions real users are asking.

Local Pack: For any search with local intent (like "pizza near me"), this is the block with a map and 3 business listings. It's packed with crucial data for local SEO and market research, including addresses and ratings.

Shopping Ads & Product Carousels: Triggered by commercial queries, these visual blocks are essential for e-commerce intelligence. They give you product images, prices, and seller info at a glance.

Key Takeaway: The secret to a robust scraper is to stop thinking about the page as a whole. Instead, build your parser to target these features individually. A PAA box needs different extraction logic than an organic link does.
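One way to put this into practice is a small registry that routes each feature to its own parser function. The sketch below is illustrative only: it assumes an earlier step has already located the raw blocks on the page and represents them as plain dicts, and the `type` values and field names (`title`, `href`, `snippet`, `questions`) are hypothetical. The point is isolation: a block that is missing a field is skipped without taking the other features down with it.

```python
from typing import Callable, Dict, List

# Registry mapping a SERP feature name to the function that parses it
PARSERS: Dict[str, Callable[[dict], dict]] = {}


def register(feature: str):
    """Decorator that registers a parser for one SERP feature type."""
    def wrapper(func: Callable[[dict], dict]) -> Callable[[dict], dict]:
        PARSERS[feature] = func
        return func
    return wrapper


@register("organic")
def parse_organic(block: dict) -> dict:
    # Hypothetical raw fields for an organic result block
    return {"title": block["title"], "link": block["href"], "snippet": block.get("snippet", "")}


@register("paa")
def parse_paa(block: dict) -> dict:
    # A "People Also Ask" block is just a list of question strings here
    return {"questions": list(block["questions"])}


def parse_serp(blocks: List[dict]) -> Dict[str, list]:
    """Route each raw block to its own parser; one broken block never stops the rest."""
    parsed: Dict[str, list] = {}
    for block in blocks:
        parser = PARSERS.get(block.get("type"))
        if parser is None:
            continue  # unknown feature type: skip instead of crashing
        try:
            item = parser(block)
        except KeyError:
            continue  # block is missing a field this parser needs
        parsed.setdefault(block["type"], []).append(item)
    return parsed
```

Adding support for a new feature, such as a local pack, then means writing one more decorated function rather than touching the existing ones.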

The Ever-Changing Landscape

Here’s the hard truth: Google’s SERP is not static. The layout, HTML structure, and CSS classes can—and will—change without any warning. This is the #1 reason why homemade scrapers break.

A perfect example is Google’s 2025 update aimed at limiting results to just 10 links and removing traditional pagination. Many see this as a direct move to make large-scale automated data collection much harder. You can read more about Google's evolving SERP design at Scorpion.co.

This is why relying on hyper-specific CSS selectors is a recipe for disaster. A selector tied to one of Google's auto-generated class names is incredibly brittle and will fail the moment Google pushes an update. A much better approach is to look for broader, more stable patterns or, even better, use a dedicated service that handles the nightmare of parser maintenance for you. This way, your data pipeline keeps running even when Google decides to rearrange the furniture.
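To illustrate what a "broader, more stable pattern" can look like, here is a minimal sketch using only Python's standard-library `html.parser`. Instead of a class name, it keys on structure: any link that wraps an `<h3>` heading is treated as a result title. That pattern is an assumption about Google's markup, not a guarantee, and navigation links without a heading are simply ignored.

```python
from html.parser import HTMLParser


class TitleLinkParser(HTMLParser):
    """Collect (title, href) pairs for every <a> that contains an <h3>.

    Relies on the structural pattern "heading inside a link" rather than
    on auto-generated class names, which tend to change more often.
    """

    def __init__(self):
        super().__init__()
        self.results = []
        self._href = None   # href of the <a> we are currently inside, if any
        self._in_h3 = False
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
        elif tag == "h3" and self._href is not None:
            self._in_h3 = True
            self._text = []

    def handle_endtag(self, tag):
        if tag == "h3" and self._in_h3:
            self._in_h3 = False
            title = "".join(self._text).strip()
            if title:
                self.results.append({"title": title, "link": self._href})
        elif tag == "a":
            self._href = None

    def handle_data(self, data):
        # Only keep text that sits inside the <h3> of a link
        if self._in_h3:
            self._text.append(data)


def extract_title_links(html: str) -> list:
    parser = TitleLinkParser()
    parser.feed(html)
    return parser.results
```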

Build Your First Google Scraper with Python

Alright, enough theory. Let's roll up our sleeves and build something. We're going to put together a basic Google scraper using Python, which is the go-to language for this kind of work. Our toolkit is simple but incredibly effective: the Requests library for talking to the web and Beautiful Soup for making sense of the HTML that comes back.

The mission here isn't to build a beast that can hammer Google with a million queries a minute. Not yet. The real goal is to understand the core mechanics—how to ask for a search results page and then pick apart the code to find what you're looking for.

By the time you're done with this section, you'll have a script that can pull data from a single SERP. That first successful run is a huge step, and it gives you the foundation for everything that comes next.

Sending the Initial Request

First things first, we have to act like a browser. That means sending a request to a URL and grabbing the raw HTML it sends back. Python's requests library is perfect for this; it’s clean and easy to understand.

But here’s the catch: you can't just send a blank request. Websites like Google are smart. They look at incoming requests for clues to figure out if they're from a real person or a script. The most fundamental clue is the User-Agent header. A User-Agent is just a string of text your browser sends to introduce itself, like "I'm Chrome on a Windows PC" or "I'm Safari on a Mac."

Sending a request without a realistic User-Agent is like showing up to a formal event in sweatpants. It immediately signals that you don't belong, making your script an easy target for being blocked.

Let's look at a quick code snippet that includes this vital header. We'll search for "how to scrape google search results" to keep things meta.

```python
import requests
from urllib.parse import quote_plus

# Define the search query and construct the URL
# (quote_plus encodes spaces as well as special characters such as & or ?)
query = "how to scrape google search results"
url = f"https://www.google.com/search?q={quote_plus(query)}"

# Set a realistic User-Agent header
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36'
}

# Send the GET request, with a timeout so the script can't hang indefinitely
response = requests.get(url, headers=headers, timeout=10)

# Check if the request was successful
if response.status_code == 200:
    print("Successfully fetched the page!")
    # We will process response.text in the next step
else:
    print(f"Failed to fetch the page. Status code: {response.status_code}")
```

This code builds a properly encoded Google search URL, tacks on a believable User-Agent, and fires off the request. If you get a status code of 200, that’s the green light: it means success! The `response.text` attribute now holds the raw HTML of the search results page, ready for us to dig into.

Parsing HTML with Beautiful Soup

Okay, we have the HTML. Now what? Raw HTML is just a messy blob of text and tags. To make any sense of it, we need a tool that can turn that chaos into an organized, searchable structure. That's exactly what Beautiful Soup was made for. It takes that wall of text and transforms it into a neat tree of objects we can navigate easily.

If you want a deeper dive into these tools for scraping in general, our guide on how to scrape a website with Python is a great place to start.

To actually parse the SERP, you have to become a bit of a detective. Using your browser's developer tools (just press F12 or right-click and "Inspect"), you can poke around the page's structure to find the HTML tags and CSS classes that wrap the data you need.

You'll quickly notice patterns. For example, all the organic search results usually sit inside `<div>` elements that share a common class. Within each of those containers, you can typically find:

The title, often inside an `<h3>` tag.

The URL, tucked away in the `href` attribute of an `<a>` tag.

The description snippet, sitting in another `<div>` with its own class.

Extracting Titles, URLs, and Descriptions

Now we put it all together. We’ll take the `response.text` from our request and hand it over to Beautiful Soup. Then, we can tell it to find all the organic result containers and loop through them, plucking out the title, link, and description from each one.

Here’s a pro tip for building a scraper that doesn't break easily: plan for failure. You have to account for inconsistencies. What if a search result is a video and doesn't have a description snippet? If your code expects a description every time, it will crash. Using `try`/`except` blocks or conditional checks makes your script resilient, so one weird result doesn't bring the whole operation down.

This complete script builds directly on what we've already done:

```python
import requests
from bs4 import BeautifulSoup
from urllib.parse import quote_plus

query = "how to scrape google search results"
url = f"https://www.google.com/search?q={quote_plus(query)}"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36'
}

response = requests.get(url, headers=headers, timeout=10)

if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    results = []

    # NOTE: These selectors can change. Always inspect the current SERP structure.
    for g in soup.find_all('div', class_='tF2Cxc'):
        title = g.find('h3')
        link = g.find('a')
        description = g.find('div', class_='VwiC3b')

        # Handle cases where an element (or the link's href) might be missing
        title_text = title.get_text(strip=True) if title else 'No Title Found'
        link_href = link.get('href', 'No Link Found') if link else 'No Link Found'
        description_text = description.get_text(strip=True) if description else 'No Description Found'

        results.append({
            'title': title_text,
            'link': link_href,
            'description': description_text,
        })

    print(results)
else:
    print(f"Failed to fetch the page. Status code: {response.status_code}")
```

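If the selectors still match Google's current markup, running this prints a clean list of dictionaries to your console, where each dictionary is a neatly organized search result. If you get an empty list instead, the class names have most likely changed, so re-inspect the page. Once it works, congratulations: you've just scraped your first Google SERP.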

So, you've got a basic scraper up and running. If you've tried it, you've probably already hit the biggest wall in this game: Google's seriously impressive anti-bot defenses. Firing off repeated requests from the same IP address is like setting off a flare. It won’t be long before you’re staring at CAPTCHAs or, even worse, a total block.

This is where the real craft of web scraping comes into play. Getting past these defenses isn't about brute force; it's about being clever and subtle. Your goal is to make your scraper look and act just like a real person browsing the web. That means thinking about your digital footprint, mimicking browser behavior, and not hammering their servers. If you skip these steps, any attempt to scrape Google at scale is basically dead on arrival.

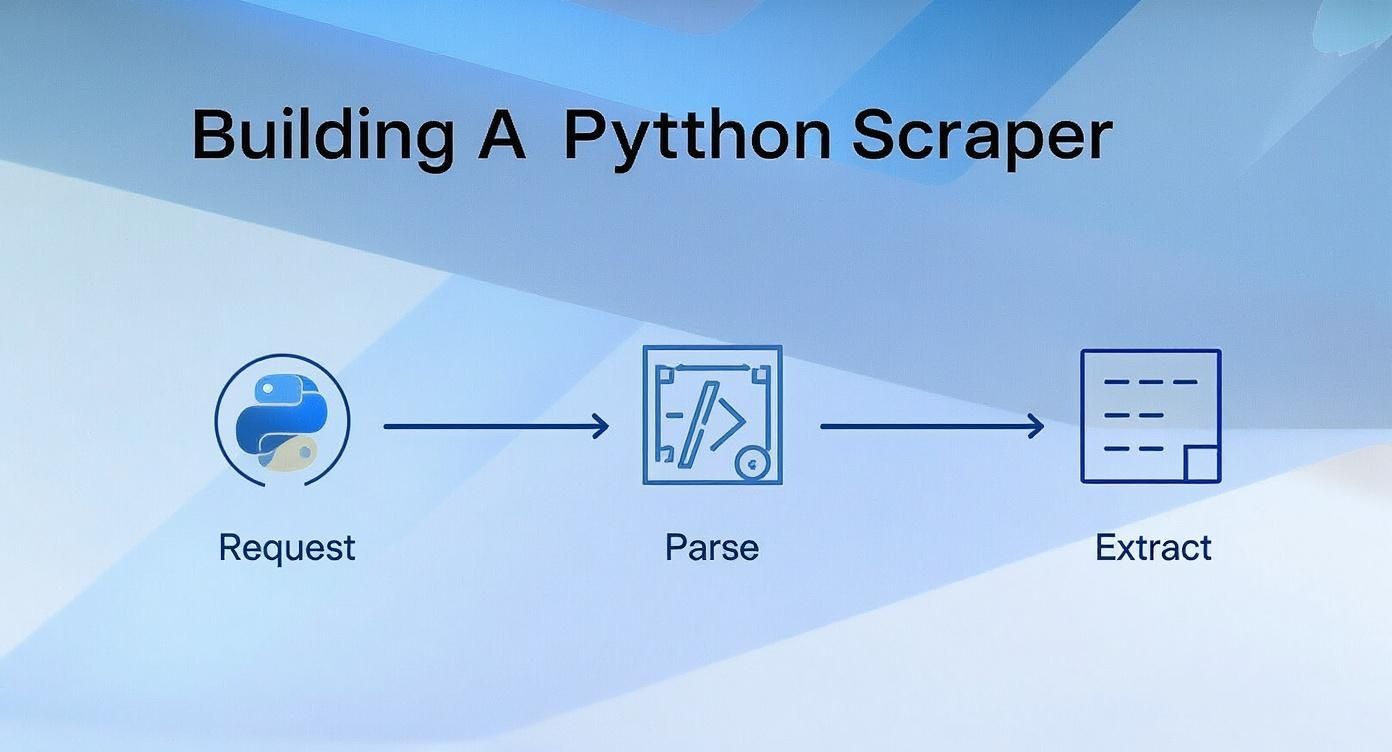

The whole process, from request to data, can seem simple on the surface.

This flow shows the nuts and bolts, but successfully staying under the radar requires adding a few more layers of sophistication to this model.

The Power of IP Rotation

The first and most critical tactic is IP rotation. Think about it: if all your requests flood in from one IP address, Google’s systems will spot that unnatural activity in a heartbeat. An IP ban is swift, and just like that, your scraper is shut down. The answer here is proxies.

A proxy server is basically a middleman. It takes your request and forwards it to Google, masking your real IP with its own. When you use a whole pool of these proxies, you can spread your requests across hundreds or even thousands of unique IP addresses. This makes it incredibly difficult for Google to piece together that all this activity is coming from a single source.
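A simple round-robin rotation can be sketched with `itertools.cycle`. The proxy URLs below are placeholders (your provider supplies the real hostnames and credentials), and the returned dict uses the scheme-keyed shape that the requests library's `proxies` argument accepts.

```python
from itertools import cycle

# Hypothetical proxy endpoints; substitute the URLs your provider gives you.
PROXY_POOL = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]


class ProxyRotator:
    """Round-robin over a proxy pool, yielding dicts in the shape requests expects."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._pool = cycle(proxies)

    def next_proxies(self) -> dict:
        proxy = next(self._pool)
        # requests picks the entry whose key matches the target URL's scheme
        return {"http": proxy, "https": proxy}
```

Each call such as `requests.get(url, headers=headers, proxies=rotator.next_proxies(), timeout=10)` would then leave through the next IP in the pool. Round-robin is the simplest policy; many setups instead pick proxies at random or retire ones that start returning errors.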

Proxy Type Comparison for Google Scraping

Choosing the right proxy is a big decision that balances cost, performance, and how "human" you appear. Here's a quick breakdown of your main options.

| Proxy Type | Anonymity Level | Cost | Best Use Case for Google Scraping |
|---|---|---|---|
| Datacenter | Low | $ | Good for initial testing, but easily detected and blocked by Google for any serious scraping. |
| Residential | High | $$ | The sweet spot. These are real home IP addresses, so your requests look like they come from genuine users. Ideal for most Google scraping tasks. |
| Mobile | Very High | $$$ | The top tier. Traffic comes from mobile carriers, making it almost impossible to distinguish from a real user on a phone. Use for the toughest targets. |

Each type has its place, but for scraping a target as sophisticated as Google, residential proxies are usually the most reliable starting point. For a more detailed look into this, we have a complete guide on rotating proxies for web scraping. The trick is matching the proxy type to your project's needs and budget.

Mimicking Human Behavior

Google looks at a lot more than just your IP. To avoid detection, your scraper needs to present a digital signature that looks just like a real browser.

This starts with something we've touched on: User-Agents. Sending the same User-Agent string with every single request is a classic rookie mistake. A much smarter move is to keep a list of current, common User-Agents (think recent versions of Chrome, Firefox, and Safari on different operating systems) and cycle through them randomly.
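A minimal sketch of User-Agent rotation with `random.choice` follows. The three strings are illustrative examples from the same era as the Chrome 108 string used earlier, so treat the list as a placeholder to refresh, and the extra `Accept-Language` header is an assumption added because real browsers send one.

```python
import random

# Illustrative pool only; refresh it regularly, since outdated versions stand out.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:107.0) Gecko/20100101 Firefox/107.0",
]


def random_headers(rng=None) -> dict:
    """Build request headers with a randomly chosen User-Agent.

    Pass a seeded random.Random instance as rng for reproducible choices.
    """
    chooser = rng if rng is not None else random
    return {
        "User-Agent": chooser.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
    }
```

Call `random_headers()` fresh for every request rather than once at startup; otherwise every request still carries the same User-Agent.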

But it gets deeper. You also have to contend with browser fingerprinting. Modern browsers share a ton of data that creates a unique "fingerprint"—things like your screen resolution, installed fonts, browser plugins, and even how your graphics card renders things. Anti-bot systems collect this data to profile visitors. If your script provides an incomplete or inconsistent fingerprint, it’s an immediate giveaway.

This is why you often need to go beyond simple requests and use headless browsers powered by tools like Selenium or Playwright. These tools can actually render a full webpage, execute JavaScript, and generate a complete, convincing browser fingerprint that looks legitimate.

Expert Insight: The game is won or lost on the details. Rotating IPs and User-Agents is the baseline. True resilience comes from managing the full browser fingerprint and ensuring your request patterns—like the time between requests—don't follow a predictable, robotic rhythm.

Smart Throttling and Rate Limiting

Even with the perfect disguise, no human can send thousands of search queries in a few seconds. This is where smart throttling becomes essential. Instead of hitting Google's servers as fast as your connection allows, you need to introduce strategic, randomized delays between your requests, and slow down further whenever the server pushes back. That second part is often called a "backoff" strategy.
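The simplest form of this is a randomized pause between requests. The sketch below assumes a 2 to 6 second window, which is an arbitrary starting point rather than a known safe rate; the `sleep` parameter exists only so the function can be tested without actually waiting.

```python
import random
import time


def polite_pause(min_s: float = 2.0, max_s: float = 6.0, sleep=time.sleep) -> float:
    """Sleep for a random duration between min_s and max_s seconds and return it.

    Randomizing the gap avoids the fixed, robotic rhythm of a constant delay.
    """
    delay = random.uniform(min_s, max_s)
    sleep(delay)
    return delay
```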

A simple way to do this is to just pause for a few seconds between each request. A more robust technique is an exponential backoff algorithm. If your scraper gets an error or a CAPTCHA page, you don't just retry immediately. Instead, you significantly increase the waiting period before the next attempt. This signals to the server that you're "backing off" in response to rate limiting, which is far less aggressive than a bot that just keeps hammering away. It's not just about avoiding blocks; it's also a more ethical way to scrape that respects the server's resources.
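Exponential backoff can be sketched as a retry loop that doubles its wait after each rate-limited response. In this sketch, `fetch` stands for any zero-argument function returning a `(status_code, body)` pair (for example, a hypothetical wrapper around `requests.get`), and treating HTTP 429 and 503 as the "slow down" signals is an assumption; a CAPTCHA page served with status 200 would need its own check. A little random jitter is added so retries do not line up on a predictable schedule.

```python
import random
import time


def fetch_with_backoff(fetch, max_retries: int = 5, base_delay: float = 2.0,
                       max_delay: float = 120.0, sleep=time.sleep):
    """Call fetch() until it is not rate limited, doubling the wait after each failure.

    fetch is any zero-argument callable returning (status_code, body).
    A 429 or 503 is treated as "slow down"; any other status is returned as-is.
    """
    for attempt in range(max_retries + 1):
        status, body = fetch()
        if status not in (429, 503):
            return status, body
        if attempt == max_retries:
            break
        # Exponential growth (2, 4, 8, ... seconds) capped at max_delay, plus up to 50% jitter
        delay = min(max_delay, base_delay * 2 ** attempt)
        sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError(f"Still rate limited after {max_retries} retries")
```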

Scraping Google is a popular game. In fact, it's estimated to account for about 42% of all web scraping activity as of 2025. You can find more details on these web crawling benchmarks on Thunderbit.com. This number alone explains why Google invests so much in its anti-bot tech and why you need a multi-layered defense. By combining IP rotation, realistic browser emulation, and smart rate-limiting, you can build a scraper that’s not just effective, but built to last.

Scale Your Scraping with a SERP API

Building your own scraper is a fantastic way to learn the ropes, but it doesn't take long to hit a wall. As soon as you try to scale up, you'll find yourself drowning in the hidden costs of maintenance. All your time goes into managing proxy pools, updating parsers after a minor HTML change, and fighting a losing battle against CAPTCHAs.

At this point, you have a choice: keep patching a fragile script or start working smarter. The professional approach is to offload all that heavy lifting to a specialized tool. This is exactly where a dedicated SERP scraping API comes in. It’s a strategic shift from building and maintaining infrastructure to simply asking for the data you need.

What Is a SERP API and Why Use One?

Think of a SERP API as an expert team on retainer. Instead of building the car from scratch, you just tell a professional driver where you want to go. You make a single, simple API call with your search query and any parameters you need, like a specific location or language.

That's it. The API service handles all the messy, frustrating parts on the backend:

Proxy Management: It automatically rotates through thousands of residential and mobile IPs, making every request look like it's coming from a unique, real user.

CAPTCHA Solving: Sophisticated systems solve CAPTCHAs on the fly, so your requests never get stuck.

Browser Emulation: The service manages everything from user agents to full browser fingerprints to mimic genuine human behavior.

Parser Maintenance: A dedicated team is on standby to update HTML parsers the moment Google tweaks its SERP layout, ensuring your data structure remains consistent.

What do you get back? Clean, structured JSON. This completely removes the need for Beautiful Soup or any other local parsing library. The time you save is massive, letting you focus on analyzing data instead of just trying to get it.

Integrating an API into Your Python Script

Let's see just how much this simplifies the Python script we built earlier. Instead of wrestling with raw, messy HTML, we can get structured data directly using a service like ScrapeUnblocker.

The entire logic changes. No more hunting for CSS selectors, inspecting HTML, or writing defensive checks to handle missing elements. You just define what you want to search for and let the API do all the work.

```python
import requests
import json

# Your ScrapeUnblocker API credentials
api_key = 'YOUR_API_KEY'

# Define the search parameters
payload = {
    'query': 'how to scrape google search results',
    'country': 'us',  # Target results from the United States
    'google_domain': 'google.com'
}

# The API endpoint for Google Search
api_url = 'https://api.scrapeunblocker.com/google/search'

try:
    response = requests.post(
        api_url,
        params={'api_key': api_key},
        json=payload
    )
    response.raise_for_status()  # Raise an exception for bad status codes (4xx or 5xx)

    # The API returns structured JSON data directly
    search_results = response.json()

    # Print the organic results in a readable format
    print(json.dumps(search_results.get('organic_results', []), indent=2))
except requests.exceptions.RequestException as e:
    print(f"An error occurred: {e}")
```

See the difference? The complexity is just gone. We send our query and location, and in return, we get a clean JSON object. This doesn't just include organic results; it can have "People Also Ask" boxes, ads, and local pack data, all neatly organized and ready to use.

Key Insight: Using a SERP API transforms scraping from a constant battle against anti-bot systems into a predictable data engineering task. You abstract away the reliability challenges and focus solely on the data itself.

If you're evaluating different providers, there are plenty of great options out there. To get a solid overview of the market and compare features, check out this guide to the 12 best web scraping API options for 2025. Making this strategic shift is how you reliably scrape Google at any real scale.

Common Questions About Scraping Google

If you're getting into Google scraping, you've probably got a few questions. It’s a field where technical know-how, legal awareness, and practical strategy all intersect. Let's break down some of the most common things people ask when they start out.

Is It Legal to Scrape Google?

This is the big one, and the short answer is encouraging. Scraping publicly available data, which includes Google search results, is generally legal. In the US, major court cases (most notably hiQ Labs v. LinkedIn) have supported the idea that if anyone can see the data with a web browser, a bot can access it too without violating anti-hacking laws.

Where things get murky is what you do with the data. If you scrape copyrighted material and pass it off as your own, you're crossing a line. The same goes for personal data. It’s always smart to run your specific project by a legal expert to make sure you’re in the clear.

Can Google Tell When You Are Scraping?

Absolutely. Google's systems for spotting automated traffic are second to none. A basic script that fires off hundreds of requests from the same IP address will get flagged almost instantly.

They’re looking for a footprint that just doesn’t look human. This includes things like:

Unnatural Speed: Firing off requests faster than any person could possibly click and type.

Repetitive Fingerprints: Using the exact same browser headers and user-agent for every single request.

No JavaScript Rendering: Most simple scrapers just fetch HTML, but modern websites expect browsers to run scripts. Not doing so is a dead giveaway.

This is exactly why sophisticated tools that rotate IPs and mimic real browser behavior aren't just a "nice-to-have"—they're a must for any serious scraping operation.

What Is the Best Data Format for Scraped SERP Data?

You could just save the raw HTML of every search result page, but that just creates another problem you have to solve later. For practical use, the gold standard is JSON (JavaScript Object Notation).

JSON is a perfect fit. It's lightweight, easy for developers to work with, and even readable for humans. More importantly, it lets you organize the scraped information into a clean, nested structure. You can have separate keys for organic results, ads, "People Also Ask" boxes, and local business listings. This makes the data immediately useful, whether you're feeding it into a database or an analytics dashboard.
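As an illustration, a single SERP could be stored as one nested JSON object along these lines. The key names are a suggested layout, not a standard or any particular API's schema, and the values are placeholders.

```python
import json

# Illustrative record for one search; keys and values are placeholders.
serp_record = {
    "query": "how to scrape google search results",
    "country": "us",
    "organic_results": [
        {
            "position": 1,
            "title": "Example result title",
            "link": "https://example.com/",
            "snippet": "Example description snippet.",
        }
    ],
    "people_also_ask": ["Is it legal to scrape Google?"],
    "ads": [],
    "local_results": [],
}

# Serialize to a single line of JSON; ensure_ascii=False keeps non-English text readable
line = json.dumps(serp_record, ensure_ascii=False)

# Loading it back yields the same nested structure
restored = json.loads(line)
print(restored["organic_results"][0]["title"])
```

Writing one such object per line (the JSON Lines convention) makes it easy to append new searches to a file and load them into a database later.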

Key Takeaway: The goal isn't just to get the data; it's to get it in a structured format that's ready for immediate use. APIs that deliver pre-parsed JSON save you a significant amount of development time and effort.

How Many Results Can I Scrape from Google?

While there's no hard technical limit, there are definitely practical ones. Google usually displays about 8-10 organic results per page and has largely done away with deep pagination (going to page 50, for example). If you try to scrape thousands of results for a single search term, you're going to hit a wall of CAPTCHAs and blocks very quickly.

A much better strategy for large-scale projects is to go wide, not deep. Scrape the first page or two of results for thousands of different, but related, keywords. This approach looks much more like natural human searching and will ultimately give you a richer, more valuable dataset.
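Here is a minimal sketch of a "wide" query plan: a few seed terms are combined with modifiers, and each resulting query is limited to its first page or two. The seed terms and modifiers are hypothetical, and using the `start` URL parameter as a 10-result offset for page two is an assumption based on Google's long-standing URL format, which may change.

```python
from itertools import product
from urllib.parse import urlencode

SEED_TERMS = ["web scraping", "serp api"]      # hypothetical seed keywords
MODIFIERS = ["", "python", "best", "how to"]   # hypothetical modifiers ("" = bare seed)


def build_query_plan(seeds, modifiers, pages: int = 1) -> list:
    """Expand seeds x modifiers into many shallow searches (first `pages` pages each)."""
    urls = []
    for seed, mod in product(seeds, modifiers):
        query = f"{mod} {seed}".strip()
        for page in range(pages):
            params = {"q": query}
            if page:
                # Assumed offset parameter: 10 results per page
                params["start"] = page * 10
            urls.append("https://www.google.com/search?" + urlencode(params))
    return urls
```

With two seeds and four modifiers, `build_query_plan(SEED_TERMS, MODIFIERS)` yields eight shallow searches instead of one deep one.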

Trying to manage all these complexities on your own is a full-time job. Instead of constantly tweaking parsers and fighting with blocks, let ScrapeUnblocker do the heavy lifting. Our API gives you clean, structured JSON from any Google SERP, handling all the proxy rotation, CAPTCHA solving, and browser fingerprinting for you. You can focus on what to do with the data, not how to get it.

Learn more at https://www.scrapeunblocker.com.
