12 Best Web Scraping API Options for 2025

- Nov 12, 2025

In 2025, modern websites are fortresses. They're built with dynamic JavaScript frameworks, guarded by sophisticated anti-bot systems like Cloudflare and Akamai, and designed to detect and block automated data collection. For developers and data engineers, this means the simple days of sending a plain HTTP request and parsing the returned HTML are long gone. Building and maintaining a robust in-house scraping solution now requires managing headless browsers, rotating premium proxies, solving CAPTCHAs, and reverse-engineering bot detection scripts. This has become a full-time job in itself, diverting resources from core product development.

The alternative is a web scraping API, which outsources this complex infrastructure, allowing you to focus solely on parsing the data you need. But with dozens of providers all claiming to be the best, how do you choose? A poor choice leads to a cascade of problems: failed requests, incomplete or inaccurate data, unpredictable costs, and ultimately, project failure. The wrong API can be just as difficult to manage as a broken in-house system.
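As a rough illustration of what that outsourcing looks like from the client side, the sketch below builds a single request URL for a generic scraping API. The endpoint `https://api.scraper.example/v1/scrape` and the parameter names (`api_key`, `url`, `render_js`, `country`) are hypothetical placeholders, not the interface of any provider in this list; the real names will be in your provider's documentation.

```python
from typing import Optional
from urllib.parse import urlencode

# Hypothetical endpoint used only for illustration.
API_ENDPOINT = "https://api.scraper.example/v1/scrape"


def build_scrape_url(target_url: str, api_key: str,
                     render_js: bool = True,
                     country: Optional[str] = None) -> str:
    """Return the GET URL that asks the (assumed) API to fetch target_url."""
    params = {
        "api_key": api_key,
        "url": target_url,  # urlencode percent-encodes the target URL
        "render_js": "true" if render_js else "false",
    }
    if country:
        params["country"] = country  # assumed geotargeting parameter
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_scrape_url("https://example.com/products?page=2",
                               api_key="YOUR_KEY", country="us")
print(request_url)
```

The point of the pattern is that proxies, browsers, and retries live behind that one URL, so the client code stays this small regardless of how hard the target is.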

This guide cuts through the marketing noise. We provide a comprehensive analysis of the top contenders to help you find the best web scraping API for your specific project. We'll break down the critical evaluation criteria, from JavaScript rendering and proxy quality to pricing models and developer experience. Each review includes a direct link to the service, an honest assessment of its strengths and weaknesses, ideal use cases, and practical considerations. Whether you're extracting SERP insights, monitoring e-commerce prices, or gathering data for an AI model, this list will help you make an informed decision and get the data you need reliably.

1. ScrapeUnblocker

ScrapeUnblocker earns its top spot by offering a powerful, all-in-one web scraping infrastructure designed to tackle the most challenging modern websites. It distinguishes itself by integrating a comprehensive anti-blocking stack directly into a single API endpoint. This service is engineered for developers and data teams who need reliable, high-fidelity data without the operational burden of managing proxies, browser farms, or complex anti-bot workarounds. For those looking for the best web scraping API that balances raw power with developer-centric simplicity, ScrapeUnblocker presents a compelling, robust solution.

The platform's core strength lies in its ability to mimic human browsing behavior with near-perfect accuracy. Every API request is executed through a real browser instance, complete with full JavaScript rendering capabilities. This is combined with a premium, rotating residential proxy network, ensuring requests appear indistinguishable from organic user traffic, effectively bypassing sophisticated CAPTCHAs and bot detection systems found on sites like Amazon, Zillow, and LinkedIn.

Key Features & Use Cases

ScrapeUnblocker is built for high-stakes data extraction where accuracy and consistency are paramount. Its feature set is optimized for both ease of use and advanced control.

Advanced Anti-Bot Evasion: Combines JS rendering, real browser fingerprints, and a high-quality residential proxy network to handle dynamic, script-heavy websites.

Flexible Data Output: Developers can retrieve either raw, untouched HTML for their own parsing logic or request structured JSON data directly from the API.

Precise Geotargeting: Allows for requests to be routed through specific countries and even cities, which is critical for scraping localized search results, pricing, and content.

High Concurrency: The infrastructure supports unlimited concurrent requests, enabling massive-scale data collection for projects like training AI/ML models or building large market intelligence databases.

This makes it an ideal tool for demanding use cases such as SERP data aggregation for SEO platforms, real-time price intelligence from e-commerce giants, and large-scale data harvesting from job boards or real estate portals.

Pricing and Onboarding

ScrapeUnblocker’s pricing model is a significant advantage. It operates on a straightforward per-request basis where one API call equals one request, eliminating the complex credit systems used by many competitors. While specific pricing tiers are not publicly listed and require contacting sales, this model provides transparent and predictable costs. The company backs its service with a quality guarantee, offering to help resolve data issues or waive charges if results are unsatisfactory. Developer onboarding is streamlined with clear documentation, a sandbox for testing, and priority support.
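To see why a flat per-request model is easier to forecast than a credit system, consider the arithmetic below. Every number in it (the prices, the credit multipliers, the traffic mix) is invented for illustration and does not reflect any provider's real rates.

```python
# All figures are hypothetical, chosen only to illustrate the arithmetic.
FLAT_PRICE_PER_REQUEST = 0.002   # USD; one API call = one billed request
CREDIT_PRICE = 0.0005            # USD per credit
CREDITS_PER_REQUEST = {"plain": 1, "js": 5, "js_premium_proxy": 25}


def flat_cost(request_counts: dict) -> float:
    """Cost when every request is billed at the same price."""
    return sum(request_counts.values()) * FLAT_PRICE_PER_REQUEST


def credit_cost(request_counts: dict) -> float:
    """Cost when each request type consumes a different number of credits."""
    credits = sum(CREDITS_PER_REQUEST[kind] * count
                  for kind, count in request_counts.items())
    return credits * CREDIT_PRICE


monthly_mix = {"plain": 60_000, "js": 30_000, "js_premium_proxy": 10_000}
print(f"flat:   ${flat_cost(monthly_mix):.2f}")
print(f"credit: ${credit_cost(monthly_mix):.2f}")
```

Under the flat model the bill depends only on request volume. Under the credit model it also depends on the mix of request types, which is usually harder to predict in advance.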

Pros:

Robust anti-blocking stack bypasses most modern defenses.

Simple, predictable per-request pricing model.

Highly scalable with unlimited concurrency and city-level targeting.

Strong focus on data accuracy with a quality guarantee.

Developer-friendly with flexible output and support resources.

Cons:

Pricing details are not publicly available on the website.

Users are responsible for ensuring legal compliance for target sites.

Website: https://www.scrapeunblocker.com

2. Zyte – Web Scraping API

Zyte, formerly known as Scrapinghub, offers one of the most mature and developer-centric web scraping API solutions on the market. Its strength lies in its deep integration with the Scrapy framework, making it a natural choice for Python developers. The API is designed as an all-in-one data extraction tool, managing everything from proxy rotation and smart retries to headless browser rendering for JavaScript-heavy websites.

What makes Zyte stand out is its success-based billing model. You only pay for successful requests, which aligns the platform's performance with your costs and encourages adherence to web scraping best practices. This model, combined with robust SDKs for Python and Node.js, provides a reliable foundation for scaling data extraction projects from simple scripts to enterprise-level operations.
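The effect of success-based billing on the real cost of a usable page can be sketched as follows. The status codes and the price are made-up sample values, and the function names are illustrative rather than part of any SDK.

```python
def billable_requests(status_codes: list) -> int:
    """Count 2xx responses, the only ones billed under success-based pricing."""
    return sum(1 for code in status_codes if 200 <= code < 300)


def cost_per_usable_page(status_codes: list, price: float,
                         pay_on_success_only: bool) -> float:
    """Total spend divided by the number of pages that actually came back."""
    successes = billable_requests(status_codes)
    if successes == 0:
        raise ValueError("no successful responses to divide by")
    billed = successes if pay_on_success_only else len(status_codes)
    return billed * price / successes


# Hypothetical crawl: 5 of 8 attempts succeed.
statuses = [200, 200, 403, 200, 429, 200, 503, 200]
price = 0.001  # USD per billed request, invented for the example

print(cost_per_usable_page(statuses, price, pay_on_success_only=True))
print(cost_per_usable_page(statuses, price, pay_on_success_only=False))
```

When failures are billed, the cost per usable page rises in proportion to the failure rate; when only successes are billed, it stays at the list price.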

Key Features & Use Case

The platform is particularly effective for tackling difficult, well-protected targets like e-commerce sites or complex portals that rely heavily on JavaScript. Its automatic anti-bot bypassing and CAPTCHA handling mechanisms are battle-tested and highly effective.

Pros:

Success-Based Billing: Transparent pricing where you only pay for successful 2xx responses.

Mature Ecosystem: Excellent documentation and native integration with the Scrapy framework.

Scalability: Proven to handle large-scale enterprise data extraction needs.

Cons:

Complex Pricing Tiers: Costs can be difficult to forecast as they vary based on target website difficulty.

Higher Cost for JS: Heavy JavaScript rendering can significantly increase per-request costs.

3. Bright Data – Web Scraper API and Scraping Browser

Bright Data is an enterprise-grade data collection platform offering a comprehensive suite of tools, including its powerful Web Scraper API and Scraping Browser. It's recognized for its massive global IP network and autonomous unblocking capabilities, making it a go-to solution for large-scale operations tackling the most challenging websites. The platform provides a diverse product catalog designed to meet various data extraction needs, from simple URL fetching to fully managed browser-based scraping.

What sets Bright Data apart is its focus on enterprise reliability and its vast infrastructure. The platform’s strength lies in its underlying proxy network, which powers its unblocking success. This makes it one of the best web scraping API choices for businesses that require high availability, robust security features like SSO and audit logs, and predictable performance backed by service-level agreements (SLAs).

Key Features & Use Case

The Web Scraper API is ideal for structured data extraction at scale, while the Scraping Browser excels at handling complex, JavaScript-heavy sites that require browser interactions. This dual offering makes Bright Data suitable for a wide range of use cases, from e-commerce price monitoring to financial data aggregation where accuracy and uptime are critical.

Pros:

Broad Product Catalog: Extensive selection of tools for different scraping scenarios.

Battle-Tested at Scale: Proven reliability and performance for enterprise-level data collection.

Flexible Billing: Offers clear, separate pricing tiers for its record-based API and GB-based Browser.

Enterprise-Ready: Includes features like SLAs, SSO, and AWS Marketplace availability.

Cons:

Higher Cost at Low Volume: Can be pricier than leaner competitors for smaller projects.

Complex Product Selection: The extensive product lineup can make choosing the right tool initially confusing.

4. Oxylabs – Web Scraper API

Oxylabs offers a powerful and results-oriented solution with its Web Scraper API, designed to deliver parsed data from even the most complex targets. The service handles proxy management, JavaScript rendering, and CAPTCHA solving, allowing developers to focus on data utilization rather than infrastructure maintenance. Its key differentiator is a billing model centered on successful results, which brings cost predictability to large-scale data gathering projects.

What makes this one of the best web scraping API options is its use of specialized endpoints for major platforms like e-commerce marketplaces and search engines. These dedicated endpoints simplify the scraping process by providing structured JSON output tailored to the target, removing the need for manual parsing. This approach, combined with its robust proxy network, makes it an excellent choice for teams that need reliable, pre-formatted data without the hassle of managing complex scraping logic. For more information on the proxy technology that powers such tools, explore this guide on rotating proxies for web scraping.

Key Features & Use Case

The API is particularly well-suited for structured data extraction from e-commerce, real estate, and travel aggregation sites. Its ability to return clean, parsed JSON data makes it ideal for price monitoring, market research, and lead generation tasks where data consistency is critical.

Pros:

Results-Based Billing: Costs are tied to successfully delivered results, making financial forecasting straightforward.

Dedicated Endpoints: Simplifies scraping popular, high-value targets by providing structured data output.

Excellent Support: Offers 24/7 live support and comprehensive documentation to assist with integration.

Cons:

Higher Costs for JS: Rendering JavaScript-heavy pages will increase the cost per result.

Plan-Based Rate Limits: Concurrency and request limits are tied to pricing tiers, which may require upgrades for high-volume needs.

5. Apify

Apify presents a unique, full-featured data collection platform rather than just a simple web scraping API. It provides a serverless environment where developers can build, run, and scale "Actors"—cloud programs that can perform any web scraping or automation task. This model is ideal for teams building complex data workflows or those who prefer leveraging a marketplace of pre-built scrapers for common targets.

The platform’s strength lies in its flexibility. You can either deploy custom code using their client SDKs or find a ready-made solution in the Apify Store, saving significant development time. The serverless compute model, billed through Compute Units (CUs), abstracts away infrastructure management. This allows you to focus on the data logic while Apify handles scaling, proxy management, and headless browser execution behind the scenes.
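For budgeting purposes, Compute Unit usage can be approximated from memory and runtime. The sketch below assumes that one CU corresponds to 1 GB of memory used for one hour; treat that definition and the example run as assumptions to verify against Apify's current pricing documentation.

```python
def compute_units(memory_mb: int, runtime_seconds: float) -> float:
    """Estimate CUs as (memory in GB) x (runtime in hours).

    Assumes 1 CU = 1 GB of memory for 1 hour.
    """
    return (memory_mb / 1024) * (runtime_seconds / 3600)


# Hypothetical Actor: 2048 MB of memory, 15 minutes per run, one run per day.
per_run = compute_units(memory_mb=2048, runtime_seconds=15 * 60)
monthly = per_run * 30

print(f"per run: {per_run} CU, per 30 days: {monthly} CU")
```

Because usage scales with both memory and runtime, halving either one halves the estimate, which is a useful lever when a scheduled job starts to dominate the bill.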

Key Features & Use Case

Apify excels in scheduled, recurring data extraction tasks and complex automations that require more than just fetching raw HTML. It is a powerful choice for integrating scraped data into larger business workflows, thanks to features like built-in data storage, webhooks, and scheduling. The platform's ecosystem supports building sophisticated data pipelines from start to finish.

Pros:

Highly Flexible: Build custom scrapers or use hundreds of pre-built Actors from its marketplace.

Integrated Platform: Combines compute, proxies, storage, and scheduling in one place.

Transparent Pricing: The Compute Unit model is clear, and a generous free tier is available for testing.

Cons:

Potential for Overkill: Its feature set may be too complex for simple URL-to-HTML scraping tasks.

Cost Monitoring Required: The pay-as-you-go CU model requires active monitoring to manage costs effectively.

Website: https://apify.com/

6. ScraperAPI

ScraperAPI offers a straightforward and powerful HTTP endpoint designed to simplify the web scraping process for developers. The service manages an extensive pool of rotating proxies, including datacenter, residential, and mobile IPs, alongside headless browser rendering and CAPTCHA handling. It is an excellent choice for teams looking for a plug-and-play solution that allows them to focus on parsing data rather than managing infrastructure.

What makes ScraperAPI one of the best web scraping API options is its emphasis on developer experience. The onboarding is seamless, with clear documentation, a generous 7-day trial offering 5,000 credits, and an intuitive analytics dashboard for monitoring usage. The platform also provides advanced features like asynchronous scraping for large jobs and structured JSON endpoints for specific sites like Amazon, making it highly versatile for various data extraction needs.
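Asynchronous scraping generally follows a submit-then-poll pattern: the client submits a job, then checks its status until the result is ready. The sketch below shows only the polling half, with an in-memory stand-in instead of a network call; the `status` and `html` field names are assumptions, not ScraperAPI's documented response format.

```python
import time
from typing import Callable


def wait_for_job(get_status: Callable[[], dict],
                 poll_interval: float = 1.0,
                 timeout: float = 60.0) -> dict:
    """Call get_status() until the job is 'finished' or 'failed'."""
    deadline = time.monotonic() + timeout
    while True:
        job = get_status()
        if job["status"] in ("finished", "failed"):
            return job
        if time.monotonic() >= deadline:
            raise TimeoutError("job did not complete in time")
        time.sleep(poll_interval)


# Stand-in for a status endpoint: 'running' twice, then 'finished'.
_responses = iter([
    {"status": "running"},
    {"status": "running"},
    {"status": "finished", "html": "<html>...</html>"},
])

result = wait_for_job(lambda: next(_responses), poll_interval=0.01)
print(result["status"])
```

In a real integration, `get_status` would wrap an HTTP call to the job's status URL; passing it in as a callable keeps the polling logic testable without network access.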

Key Features & Use Case

The API is well-suited for a wide range of applications, from e-commerce price monitoring to real estate data aggregation and SERP tracking. Its country-level geotargeting and mix of proxy types allow it to bypass most anti-bot systems effectively. The combination of ease of use and scalability makes it ideal for startups and mid-sized companies that need a reliable data pipeline without a large upfront investment.

Pros:

Developer-Friendly Onboarding: Excellent documentation and a simple API structure make integration fast.

Competitive Rates: Offers good value, especially at mid-tier subscription levels.

Good Analytics: The dashboard provides clear insights into usage, success rates, and concurrency.

Cons:

Plan-Based Restrictions: Entry-level plans may have limitations on geotargeting and premium proxy access.

Premium Credit Usage: Scraping JavaScript-heavy targets can consume premium credits, increasing costs.

Website: https://www.scraperapi.com/

7. ScrapingBee

ScrapingBee is a developer-focused web scraping API designed for simplicity and quick integration. It packages essential features like headless browser rendering, premium rotating proxies, and session management into a single API call, making it an excellent choice for developers and small teams who need to get up and running quickly. The API aims to handle the complexities of scraping, allowing users to focus on data extraction rather than infrastructure management.

What makes ScrapingBee stand out is its straightforward, credit-based pricing and a generous free tier of 1,000 API calls, which is perfect for prototyping and testing. The platform also offers unique features like a dedicated Google Search API endpoint and the ability to define custom data extraction rules, which can return structured JSON data directly. This combination of accessibility and powerful features makes it a strong contender for projects that don't require massive enterprise-scale concurrency but need a reliable and easy-to-use scraping solution.

Key Features & Use Case

The platform is particularly well-suited for scraping modern websites built with JavaScript frameworks like React or Vue, as well as for automating tasks like SERP data collection or taking full-page screenshots. Its simple API parameters make it easy to enable JavaScript rendering or select a specific geographic location for your requests.

Pros:

Simple Pricing and Quick Start: Transparent, credit-based pricing and a free trial make it easy for developers to start.

Helpful Documentation: Clear and concise documentation with code examples in multiple languages.

Built-in Data Extraction: Ability to set custom rules to parse and return structured JSON data.

Cons:

Concurrency Limits: Lower-tier plans have caps on concurrent requests, which may slow down large-scale scraping.

Cost for Difficult Targets: Scraping heavily protected sites can consume credits quickly, potentially requiring higher-priced plans.

Website: https://www.scrapingbee.com/
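When a plan caps concurrent requests, the client has to stay under that cap on its own side. A minimal sketch using `asyncio.Semaphore` is shown below; `fetch` is a placeholder that sleeps instead of calling any real API, and the limit of 5 is an arbitrary example value rather than a figure from any plan.

```python
import asyncio


async def fetch(url: str) -> str:
    """Placeholder for a real API call; sleeps instead of using the network."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def scrape_all(urls: list, max_concurrent: int) -> list:
    """Fetch every URL while keeping at most max_concurrent calls in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def bounded_fetch(url: str) -> str:
        async with semaphore:  # waits here once the cap is reached
            return await fetch(url)

    # gather returns results in the same order as the input URLs
    return await asyncio.gather(*(bounded_fetch(u) for u in urls))


urls = [f"https://example.com/page/{i}" for i in range(20)]
pages = asyncio.run(scrape_all(urls, max_concurrent=5))
print(len(pages))
```

Setting `max_concurrent` to the plan's limit avoids rejected requests while still using all the concurrency that has been paid for.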

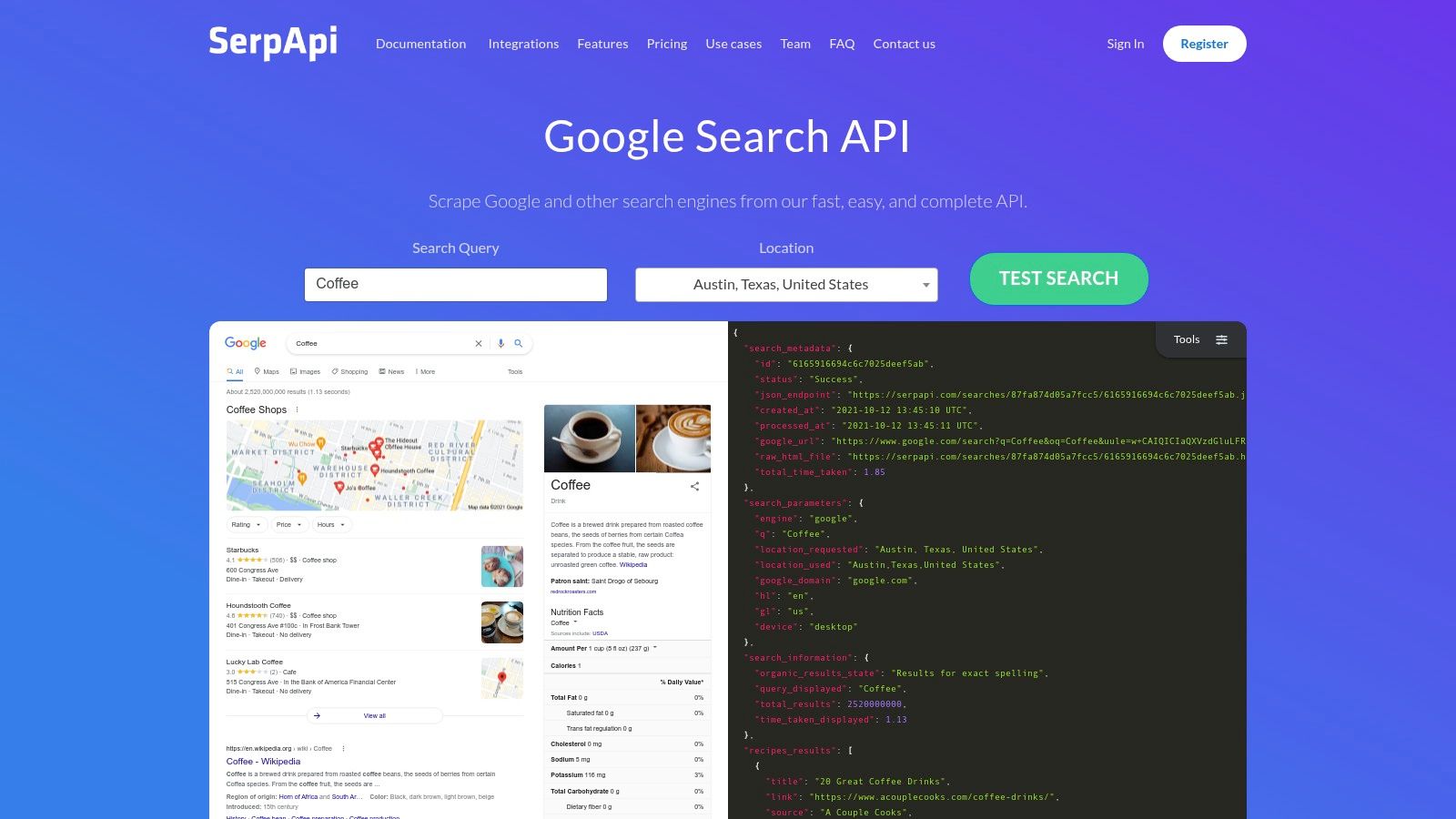

8. SerpAPI

SerpAPI distinguishes itself by focusing exclusively on a single, complex scraping challenge: search engine results pages (SERPs). Instead of being a general-purpose web scraping API, it provides a highly reliable, structured data feed directly from Google, Bing, Baidu, and other search engines. This specialization allows it to deliver parsed JSON results for web, image, news, and shopping queries with exceptional speed and accuracy, abstracting away the significant anti-bot measures search engines deploy.

The platform is designed for users who need consistent and dependable SERP data for SEO monitoring, rank tracking, or competitive analysis. It manages all the complexities of location-specific searches, proxy management, and CAPTCHA solving behind the scenes. With comprehensive SDKs for major programming languages and a commitment to only billing for successful searches, SerpAPI offers a premium, hassle-free solution for a very specific but critical data-gathering need.

Key Features & Use Case

SerpAPI is the go-to tool for marketing agencies, SEO platforms, and any business that relies on timely and accurate search engine data. Its ability to handle location-specific queries and various search types (like Google Maps or Shopping) makes it invaluable for hyperlocal and e-commerce intelligence.

Pros:

Highly Reliable SERP Data: Extremely high success rates for a difficult scraping target.

Fast Performance: Delivers parsed JSON results quickly, often within a few seconds.

Excellent Tooling: Offers robust SDKs, a live playground, and strong customer support.

Cons:

Niche Focus: Not suitable for general-purpose web scraping outside of search engines.

Premium Pricing: Can be more expensive than general APIs, especially for high-volume needs.

Website: https://serpapi.com/

9. DataForSEO

DataForSEO positions itself not as a general-purpose scraper, but as a specialized suite of APIs focused on providing structured SEO and marketing data. It offers an extensive catalog of endpoints for search engine results pages (SERPs), keyword data, on-page analysis, and e-commerce platforms like Amazon. This makes it a powerful tool for marketing agencies, SEO professionals, and market researchers who need reliable, structured data without building parsers from scratch.

Its pure pay-as-you-go pricing model is a major differentiator. Users deposit funds and pay per request, with no subscriptions or monthly commitments required. The platform also offers different task queues (Standard, Priority, Live) with varying speeds and costs, allowing users to balance budget with urgency. While it's not the ideal general-purpose web scraping API for arbitrary websites, it excels at its core function of delivering high-volume SEO and e-commerce data at a predictable, granular cost.
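The budget-versus-urgency trade-off between queues can be expressed as a small selection rule: pick the cheapest queue that still meets the deadline. The queue names below come from the description above, but the prices and latencies are invented for illustration and are not DataForSEO's actual figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Queue:
    name: str
    price_per_task: float     # USD, hypothetical
    typical_latency_s: float  # seconds, hypothetical


QUEUES = [
    Queue("Standard", 0.0006, 300.0),
    Queue("Priority", 0.0012, 60.0),
    Queue("Live", 0.0020, 6.0),
]


def cheapest_queue(max_latency_s: float) -> Queue:
    """Return the lowest-priced queue whose typical latency fits the deadline."""
    candidates = [q for q in QUEUES if q.typical_latency_s <= max_latency_s]
    if not candidates:
        raise ValueError("no queue is fast enough for this deadline")
    return min(candidates, key=lambda q: q.price_per_task)


print(cheapest_queue(max_latency_s=600).name)
print(cheapest_queue(max_latency_s=10).name)
```

Batch jobs with loose deadlines fall through to the cheapest queue, while anything user-facing is routed to the faster, pricier one.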

Key Features & Use Case

DataForSEO is the go-to solution for high-volume, cost-sensitive projects centered on market intelligence and SEO analytics. Its extensive endpoint library, covering everything from Google Trends to Amazon product reviews, provides a one-stop shop for structured marketing data, backed by a free sandbox environment for testing.

Pros:

Granular PAYG Pricing: The deposit-based, per-request model offers extreme cost predictability and control.

Extensive SEO Endpoints: A huge catalog of specialized APIs for SERPs, keywords, and market data.

Scalable and Reliable: Designed to handle massive volumes of requests with 24/7 support.

Cons:

Not a General Scraper: It cannot be used to scrape arbitrary HTML from any website.

Queue-Based System: The standard queue can introduce delays, making it less suitable for real-time needs.

Website: https://dataforseo.com/

10. Smartproxy – Web Scraper API and Site Unblocker

Smartproxy offers a flexible and powerful web scraping API solution designed for developers who need both raw HTML access and structured data from popular targets. The platform is uniquely split into two main products: a "Web Scraper API" with ready-made endpoints for over 100 common domains, and a "Site Unblocker" that acts as a smart proxy for any custom target, handling anti-bot systems automatically.

What sets Smartproxy apart is its dual billing approach, offering both per-request pricing for its structured data endpoints and a per-GB model for its Site Unblocker. This flexibility allows teams to choose the most cost-effective plan based on their project's specific needs, whether it's high-volume scraping of common sources or tackling difficult custom websites. With global geotargeting and robust session management, it stands as a strong contender among the best web scraping API services.
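Choosing between per-request and per-GB billing comes down to average page size. The sketch below computes the page size at which the two models cost the same; both prices are hypothetical placeholders, not Smartproxy's rates.

```python
def per_gb_cost_per_page(avg_page_mb: float, price_per_gb: float) -> float:
    """Cost of one page when billed by data transferred (1 GB = 1024 MB)."""
    return avg_page_mb / 1024 * price_per_gb


def break_even_page_mb(price_per_request: float, price_per_gb: float) -> float:
    """Page size at which both billing models cost the same per page."""
    return price_per_request / price_per_gb * 1024


# Hypothetical prices: $0.002 per request versus $8 per GB.
break_even = break_even_page_mb(price_per_request=0.002, price_per_gb=8.0)
print(f"break-even page size: {break_even:.3f} MB")
```

Pages smaller than the break-even size are cheaper under per-GB billing; heavier pages, such as fully rendered product listings, favor per-request billing.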

Key Features & Use Case

The Web Scraper API is ideal for projects focused on popular e-commerce sites like Amazon, search engines like Google, or social platforms like YouTube, as it delivers clean JSON output with minimal setup. The Site Unblocker is better suited for developers building custom scrapers that need reliable, unblocked access to any URL while managing their own parsing logic.

Pros:

Practical Endpoints: Ready-made endpoints for over 100 popular websites simplify data extraction significantly.

Flexible Billing: Offers both per-request and per-GB pricing models to suit different use cases.

Solid Documentation: Provides clear examples and comprehensive guides to get started quickly.

Cons:

Confusing Pricing Pages: The separation of products and regional variations can make pricing difficult to navigate.

Requires Usage Tracking: The per-GB model for the Site Unblocker requires careful monitoring to control costs effectively.

11. Crawlbase (formerly ProxyCrawl)

Crawlbase, previously known as ProxyCrawl, provides a suite of tools centered around its core Crawling API, designed for straightforward and reliable data extraction. The platform is built for developers who need a simple URL-to-HTML solution that handles all the underlying complexities of proxy management and anti-bot evasion. Its approach simplifies the initial stages of a scraping project, making it accessible for quick proofs-of-concept and smaller-scale tasks.

The standout feature of this web scraping API is its billing model, which is based on success and target complexity. Crawlbase offers a pricing estimator to help forecast costs, and users are only charged for successful requests. This model, combined with a generous free tier of 1,000 requests without requiring a credit card, lowers the barrier to entry for developers looking to test their scraping ideas without initial investment. This makes it an excellent choice for ad hoc data gathering.

Key Features & Use Case

Crawlbase is particularly useful for developers needing a flexible mix of services, offering not just a crawling API but also a smart AI proxy and data storage options. Its API is effective for retrieving data from standard websites, with separate modes for non-JavaScript and JavaScript-heavy targets. The managed enterprise crawler option also provides a path for teams to scale their operations without managing the infrastructure themselves.

Pros:

Simple to Start: The free tier and transparent cost calculator make it easy to begin scraping immediately.

Flexible Offerings: Provides a mix of APIs, a smart proxy, and storage to fit different project needs.

Success-Only Billing: You only pay for successful requests, which de-risks initial development and testing.

Cons:

Unpredictable Costs: Per-site complexity pricing can make it difficult to forecast budgets for new or varied targets.

Higher JS Cost: Scraping heavily protected or JavaScript-intensive websites costs significantly more per request.

Website: https://crawlbase.com/

12. RapidAPI

RapidAPI isn't a single web scraping API but a massive API marketplace where developers can discover, test, and subscribe to thousands of APIs, including a vast selection of web scraping tools. It functions as a centralized hub, allowing you to find everything from general-purpose scrapers to highly specialized APIs for social media, e-commerce, or real estate data extraction. This makes it an excellent starting point for projects requiring niche data or for quickly comparing different providers.

The platform's key advantage is its unified interface. You can manage multiple API subscriptions, API keys, and billing from a single account. Each listing includes in-browser testing capabilities, documentation, endpoints, and code snippets in various languages, significantly lowering the barrier to entry for trying a new service. This makes RapidAPI one of the most efficient ways to evaluate a potential best web scraping API for a specific, one-off task without committing to a direct vendor contract.
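In practice, the unified interface means every API on the marketplace is called with the same two headers, `X-RapidAPI-Key` and `X-RapidAPI-Host`. The sketch below assembles such a request without sending it; the host `some-scraper.p.rapidapi.com`, the `/scrape` path, and the `url` parameter describe a hypothetical listing, so substitute the values shown on the listing you actually subscribe to.

```python
from urllib.parse import urlencode


def rapidapi_request(host: str, path: str, api_key: str, params: dict):
    """Return (url, headers) for a GET request to an API listed on RapidAPI."""
    url = f"https://{host}{path}?{urlencode(params)}"
    headers = {
        "X-RapidAPI-Key": api_key,  # the same key works across subscriptions
        "X-RapidAPI-Host": host,    # identifies which listing to route to
    }
    return url, headers


# Hypothetical listing and parameters.
url, headers = rapidapi_request(
    host="some-scraper.p.rapidapi.com",
    path="/scrape",
    api_key="YOUR_RAPIDAPI_KEY",
    params={"url": "https://example.com"},
)
print(url)
print(headers)
```

Switching providers during an evaluation then only requires changing the host, path, and parameters, while the authentication code stays the same.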

Key Features & Use Case

RapidAPI is ideal for developers who need to quickly find and integrate a specialized scraping solution for a smaller project or for those who want to prototype with several APIs before selecting a long-term partner. It excels in discovery and rapid implementation, saving significant time that would otherwise be spent on individual vendor research and account setup.

Pros:

Fast Discovery and Testing: A single platform to find, compare, and test a wide variety of scraping APIs.

Centralized Management: Unified billing and API key management simplifies administration for multiple services.

Niche Scrapers: Access to specialized and hard-to-find APIs for specific data extraction needs.

Cons:

Variable Quality: The quality and reliability of APIs can vary greatly between different providers on the marketplace.

Indirect Relationship: For mission-critical, large-scale operations, a direct relationship with a dedicated scraping API vendor is often preferable for support and SLAs.

Website: https://rapidapi.com/

Top 12 Web Scraping APIs Comparison

| Product | Core features ✨ | Quality & support ★ | Pricing & value 💰 | Best for 👥 |
|---|---|---|---|---|
| 🏆 ScrapeUnblocker | Real browsers + JS rendering, premium rotating residential proxies, city-level targeting, unlimited concurrency | ★★★★★: high accuracy, priority support, quality backstop | 💰 Simple per-request (1 call = 1 request); predictable plans & custom limits | 👥 Data teams needing reliable scraping of modern SPAs |
| Zyte – Web Scraping API | Headless rendering, proxy mgmt, CAPTCHA handling, SDKs | ★★★★☆: mature platform, strong docs & retries | 💰 Success-based billing; good for hard targets but can be complex to forecast | 👥 Teams tackling difficult JS targets at scale |
| Bright Data – Web Scraper API | Massive IP pool, autonomous unlocker, Browser API, enterprise features | ★★★★☆: enterprise-grade scale & SLAs | 💰 Record/GB tiers; feature-rich but can be pricier at low volumes | 👥 Large enterprises and high-volume crawlers |
| Oxylabs – Web Scraper API | JS rendering toggle, parsed JSON, dedicated endpoints (Amazon/Google) | ★★★★: reliable onboarding, 24/7 support | 💰 Per-1,000-results pricing; predictable for result-based billing | 👥 Teams needing dedicated endpoints for popular platforms |
| Apify | Hosted headless browsers, Actor marketplace, serverless CUs, scheduling | ★★★★: flexible platform, good tooling & free tier | 💰 Compute-unit (CU) billing; free plan to prototype | 👥 Builders of workflows, automations, and reusable scrapers |
| ScraperAPI | Rotating IPs, JS rendering, geotargeting, async & analytics | ★★★★: developer-friendly onboarding & dashboard | 💰 Competitive mid-tier rates; 7-day trial with credits | 👥 Developers wanting plug-and-play URL-to-HTML endpoints |
| ScrapingBee | Built-in rendering, premium proxies, extraction rules, screenshots | ★★★★: simple setup, clear docs | 💰 Simple pricing; free 1,000-call trial | 👥 Small teams and fast prototypes |
| SerpAPI | Multi-engine SERP coverage, location/language params, SDKs | ★★★★★: highly reliable SERP data, fast SLAs | 💰 Monthly plans; premium for enterprise SLAs | 👥 SERP-focused analytics and SEO platforms |
| DataForSEO | PAYG SEO & SERP endpoints, multiple queues (Standard/Priority/Live) | ★★★★: scalable, well-documented for SEO tasks | 💰 Very low per-request PAYG; deposit-based usage | 👥 Cost-sensitive, high-volume SEO & market-data projects |
| Smartproxy – Web Scraper API | Ready JSON endpoints for 100+ domains, session mgmt, unblocking | ★★★★: predictable plans, good docs | 💰 Per-request or per-GB depending on SKU; region variance | 👥 Teams needing ready-made endpoints for common sites |
| Crawlbase (formerly ProxyCrawl) | Complexity-based estimator, JS modes, free starter tier, success-only billing | ★★★★: transparent calculator & easy start | 💰 Free 1,000 requests; pay-only-on-success model | 👥 Developers prototyping quick URL-to-HTML extraction |
| RapidAPI | Marketplace of scraping APIs, unified billing, in-browser testing | ★★★: fast discovery; vendor quality varies | 💰 Centralized billing; pricing varies by listing | 👥 Users evaluating/trying multiple niche scrapers quickly |

Making Your Final Choice: Which API Is Right for You?

Navigating the crowded market of web scraping APIs can feel overwhelming. We've journeyed through 12 of the industry's leading solutions, dissecting their features, strengths, and ideal use cases. The central takeaway is clear: the best web scraping API is not a one-size-fits-all product. Instead, it's the one that aligns perfectly with your project's technical requirements, scale, and budget.

Your final decision hinges on a careful evaluation of your specific needs. Are you building a simple price tracker for a single e-commerce site, or are you architecting a large-scale data pipeline to feed a machine learning model? The answer dictates which features you should prioritize.

A Quick Recap for Your Decision Matrix

To simplify your choice, let's distill the key strengths of the different categories we've explored:

For Specialized Data Needs: If your primary goal is extracting structured data from search engines like Google, services like SerpAPI and DataForSEO are purpose-built for the job. They handle the complexities of SERP parsing, delivering clean, reliable data for SEO and marketing analytics.

For Complex Automation and Workflows: When your project goes beyond simple data extraction and requires multi-step automation, a platform like Apify is unparalleled. Its actor-based model provides the flexibility to build and run intricate scraping workflows in the cloud.

For General-Purpose, All-in-One Solutions: For the majority of scraping tasks that involve dynamic websites, JavaScript rendering, and sophisticated anti-bot measures, a comprehensive API is essential. Tools from Bright Data, Oxylabs, and ScraperAPI offer powerful, feature-rich platforms designed to tackle these challenges head-on.
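The three categories above can be written down as a simple first-pass filter. The function below encodes only the groupings named in this recap; the two boolean inputs are a deliberate simplification, and a real evaluation would also weigh budget, scale, and trial results.

```python
def suggest_category(needs_serp_data: bool,
                     needs_multistep_automation: bool):
    """Map two yes/no project needs to a category and its example providers."""
    if needs_serp_data:
        return "specialized SERP/SEO data", ["SerpAPI", "DataForSEO"]
    if needs_multistep_automation:
        return "automation platform", ["Apify"]
    return "general-purpose API", ["Bright Data", "Oxylabs", "ScraperAPI"]


category, providers = suggest_category(needs_serp_data=False,
                                       needs_multistep_automation=True)
print(category, providers)
```

The order of the checks reflects a judgment call: a SERP-focused project is routed to the specialized providers even if it also involves some automation.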

Why ScrapeUnblocker Stands Out

While many services offer a broad range of features, ScrapeUnblocker distinguishes itself by excelling in the areas that matter most for modern web scraping: reliability, simplicity, and developer experience. It was engineered specifically to handle the toughest targets, using real browsers and a premium residential proxy network to bypass even the most advanced anti-bot systems.

What truly sets it apart is the commitment to a transparent and predictable model. By moving away from complex credit systems in favor of a straightforward, per-successful-request pricing structure, ScrapeUnblocker removes the guesswork. This allows development teams to forecast costs accurately and focus their energy on leveraging the data they acquire, rather than wrestling with the infrastructure to get it.

With its robust features like unlimited concurrency and precise geo-targeting down to the city level, ScrapeUnblocker emerges as a powerful and scalable contender for the title of best web scraping API. It's an ideal choice for businesses that demand high-quality, accurate data without the operational overhead, from e-commerce price intelligence to large-scale data aggregation for AI training.

Ultimately, the right tool empowers you to achieve your goals efficiently and reliably. Consider your project's scope, the technical difficulty of your target sites, and your long-term scalability needs. Use this guide as your starting point, run trials, and choose the partner that will best support your data acquisition strategy.

Ready to experience a web scraping API built for performance and simplicity? Sign up for a free trial at ScrapeUnblocker and see how our powerful anti-block technology and developer-first approach can streamline your data extraction projects. Stop fighting with blocks and start getting the data you need today.
