Top 12 Best Proxies for Web Scraping in 2025

- John Mclaren

- Nov 10, 2025

Web scraping is a data-driven superpower, but it's only as good as its weakest link: the proxy. The right proxy service determines whether you get clean, reliable data or a frustrating wall of CAPTCHAs, IP bans, and failed requests. With countless providers, confusing pricing models, and various IP types, choosing the best proxies for web scraping can feel like navigating a minefield. This guide cuts through the noise and provides a definitive, actionable roadmap.

We'll break down the critical differences between residential, datacenter, ISP, and mobile proxies, explaining exactly when and why to use each type. You will get a deep dive into the top 12 proxy providers and platforms, from raw IP networks to fully integrated scraping APIs that handle everything for you. Each option is analyzed based on real-world performance, target site success rates, integration complexity, and overall value.

This comprehensive resource is designed to help you make an informed decision quickly. We compare features, geo-targeting capabilities, and pricing structures side-by-side, complete with direct links and illustrative screenshots. Whether you're a developer building a custom scraper, a data scientist needing massive datasets, or an e-commerce analyst monitoring competitor pricing, this list will help you find the perfect solution for your specific use case. Forget the marketing hype; let's focus on what truly works for collecting data at scale.

1. ScrapeUnblocker

Best For: All-in-One Scraping Infrastructure API

ScrapeUnblocker distinguishes itself by moving beyond a simple proxy service. It offers a fully integrated web scraping infrastructure API designed to tackle the most challenging data extraction targets. This platform consolidates premium rotating residential proxies with a sophisticated, real-browser rendering engine, making it an exceptionally powerful choice for developers who need reliable access to dynamic, JavaScript-heavy websites. Its core value is simplifying the entire unblocking stack into a single API call, eliminating the need to manage separate proxy, browser rendering, and CAPTCHA-solving services.

This comprehensive approach makes ScrapeUnblocker one of the best proxy solutions for web scraping when total cost of ownership and developer efficiency are paramount. Instead of wrestling with complex proxy rotation logic and anti-bot countermeasures, engineers can focus on data processing and analysis. The platform’s ability to deliver either raw HTML or structured JSON, combined with unlimited concurrency and city-level geotargeting, provides the flexibility and scale required for demanding projects.

Key Features and Strengths

ScrapeUnblocker’s architecture is built for resilience and high success rates against modern anti-bot systems.

Integrated Anti-Blocking Stack: It combines real browser execution, full JavaScript rendering, and a premium rotating residential proxy network to mimic human behavior effectively. This significantly increases success rates against targets like e-commerce marketplaces, social media platforms, and SERPs.

Simplified Pricing Model: The platform uses a straightforward per-successful-request billing system. This avoids the confusing credit-based models common in the industry, making budget forecasting predictable and transparent. You only pay for successful data retrieval.

Developer-Centric Experience: With a sandbox environment, comprehensive documentation, and priority support, ScrapeUnblocker focuses on accelerating integration. The company also offers an accuracy guarantee, promising assistance or waived charges if data quality does not meet standards.

Practical Use Cases

E-commerce & Price Intelligence: Extracting product details, pricing, and stock levels from heavily protected online retail sites.

SERP & SEO Monitoring: Gathering accurate search engine results pages from specific geographic locations for rank tracking and keyword analysis.

AI/ML Data Collection: Aggregating large, clean datasets from diverse web sources to train machine learning models.

| Feature | ScrapeUnblocker | Traditional Proxy Provider |
|---|---|---|
| Service Type | Integrated Scraping API | Proxy-as-a-Service |
| Core Function | Proxies + JS Rendering + Anti-Bot | Proxy IP Rotation |
| Pricing | Per successful request | Per GB/IPs/Credits |
| Concurrency | Unlimited | Often limited by plan |
| Target Sites | Dynamic, high-security sites | General purpose |

While ScrapeUnblocker excels in simplifying complex scraping tasks, potential users should note the absence of public per-request pricing and independent testimonials on its site. It's advisable to contact their sales team for precise rates and to conduct a trial in the sandbox. As with any scraping tool, users remain responsible for ensuring their activities comply with the target website's terms of service and all relevant legal regulations.

Website: https://www.scrapeunblocker.com

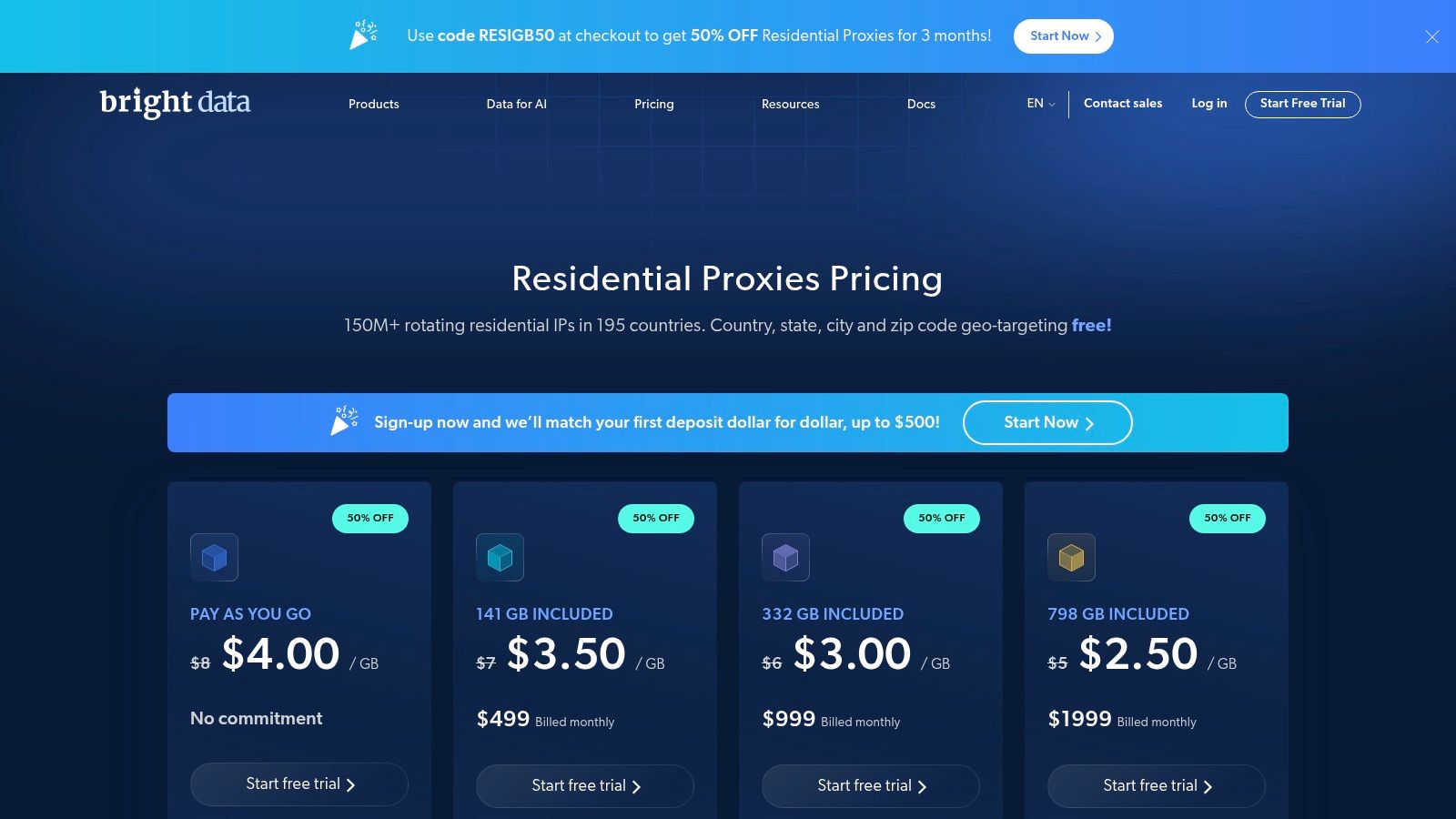

2. Bright Data

Bright Data positions itself as an enterprise-grade web data platform, and its proxy infrastructure is a core component of that offering. It provides one of the largest and most diverse proxy networks available, making it a top contender for large-scale, global web scraping operations that demand precision and reliability. The platform is particularly suited for teams needing granular control over IP addresses, down to the city, ZIP code, or even carrier level.

This provider stands out for its sheer scale, boasting over 150 million residential IPs across more than 195 countries. This extensive coverage is crucial for projects requiring localized data, such as e-commerce price monitoring or ad verification across different regions. For complex projects, their free Proxy Manager tool offers advanced rule-based routing and performance monitoring, helping to optimize costs and success rates. Explore how to leverage these large IP pools effectively in our deep dive on rotating proxies for web scraping.

Key Features and Considerations

Bright Data’s offerings are extensive, but it's important to understand the trade-offs.

Product Breadth: Access to residential, mobile, ISP (static residential), and datacenter proxies provides flexibility for any scraping scenario.

Targeting Precision: Advanced geo-targeting capabilities allow for highly specific data collection, which is a significant advantage over many competitors.

Onboarding Process: Be prepared for a Know Your Customer (KYC) verification process. This compliance step, while adding friction, ensures ethical use of the network.

Pricing Structure: The pay-as-you-go (PAYG) model is excellent for testing, but costs can escalate quickly with usage. Committed monthly plans offer better value for consistent, high-volume scraping.
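To see how quickly per-GB costs can add up, here is a rough back-of-the-envelope sketch. It uses the $10/GB residential PAYG starting price listed for Bright Data in this article; the page count, the 500 KB average page size, and the decimal definition of a gigabyte (1 GB = 1,000,000 KB) are illustrative assumptions, not provider figures.

```python
def estimate_bandwidth_cost(pages: int, avg_page_kb: float, price_per_gb: float) -> float:
    """Estimate the dollar cost of fetching `pages` pages over a per-GB proxy plan.

    Assumes decimal units (1 GB = 1,000,000 KB); providers may meter differently.
    """
    total_gb = pages * avg_page_kb / 1_000_000
    return total_gb * price_per_gb


# Assumed workload: 1,000,000 pages at roughly 500 KB each, billed at $10/GB.
cost = estimate_bandwidth_cost(1_000_000, 500, 10.0)
print(f"${cost:,.2f}")  # prints: $5,000.00
```

Real traffic also includes retries, redirects, and assets loaded by rendered pages, so actual usage is likely to be higher than this estimate.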

| Feature | Details |
|---|---|
| Proxy Pool Size | 150M+ Residential IPs |
| Geo-Targeting | Country, City, ZIP, Carrier, ASN |
| Primary Use Cases | Large-scale data aggregation, ad verification, SEO |
| Best For | Enterprises, data-heavy startups |
| Starting Price | $10/GB (Residential PAYG) |
| Unique Tooling | Free Proxy Manager, Browser Extension |

Overall, Bright Data is one of the best proxies for web scraping when budget is secondary to scale, performance, and granular control. However, its enterprise focus and pricing may be overkill for smaller projects or individual developers.

Website: brightdata.com

3. Oxylabs

Oxylabs is a premium proxy solutions provider that caters to both self-service users and large-scale enterprises. It has built a reputation for high performance and reliability, offering a comprehensive suite of proxy products, including advanced Scraper APIs designed to handle complex anti-bot measures. This makes it an excellent choice for developers who need robust infrastructure with clear, predictable pricing for smaller to mid-sized projects, while still offering the power required for enterprise-level data extraction.

The platform is particularly strong for its wide product portfolio, which includes a massive pool of over 100 million residential IPs. Its ISP proxies are a standout feature, providing the speed of datacenter IPs with the legitimacy of residential ones, perfect for tasks requiring long, persistent sessions. The clear self-service checkout process and extensive documentation lower the barrier to entry for smaller teams, allowing them to get started quickly without lengthy sales consultations.

Key Features and Considerations

Oxylabs offers a balanced approach, combining ease of use with powerful, enterprise-grade features.

Diverse Product Suite: Offers residential, mobile, ISP (static residential), and datacenter proxies, plus dedicated Web Unblocker and Scraper APIs.

Self-Service Accessibility: The clear pricing and self-serve options for many plans are a major plus for developers and small teams who want to get started without friction.

High Performance: Claims an industry-leading 99.9% uptime, ensuring scraper reliability for mission-critical data gathering operations.

Pricing Tiers: While the pay-as-you-go model is convenient, it's capped at 50GB per month for residential proxies. Scaling beyond this requires moving to a committed plan, which often involves contacting their sales team.

| Feature | Details |
|---|---|
| Proxy Pool Size | 100M+ Residential IPs |
| Geo-Targeting | Country, City, State, ASN |
| Primary Use Cases | Market research, ad verification, e-commerce scraping |
| Best For | Mid-sized businesses, developers, enterprise teams |
| Starting Price | $8/GB (Residential PAYG) |
| Unique Tooling | Scraper APIs, Proxy Rotator, Extensive Documentation |

Ultimately, Oxylabs provides one of the best proxies for web scraping by successfully bridging the gap between user-friendliness and industrial-strength performance. It’s an ideal choice for users who value high success rates and a wide range of proxy types but prefer a straightforward onboarding experience.

Website: oxylabs.io/pricing

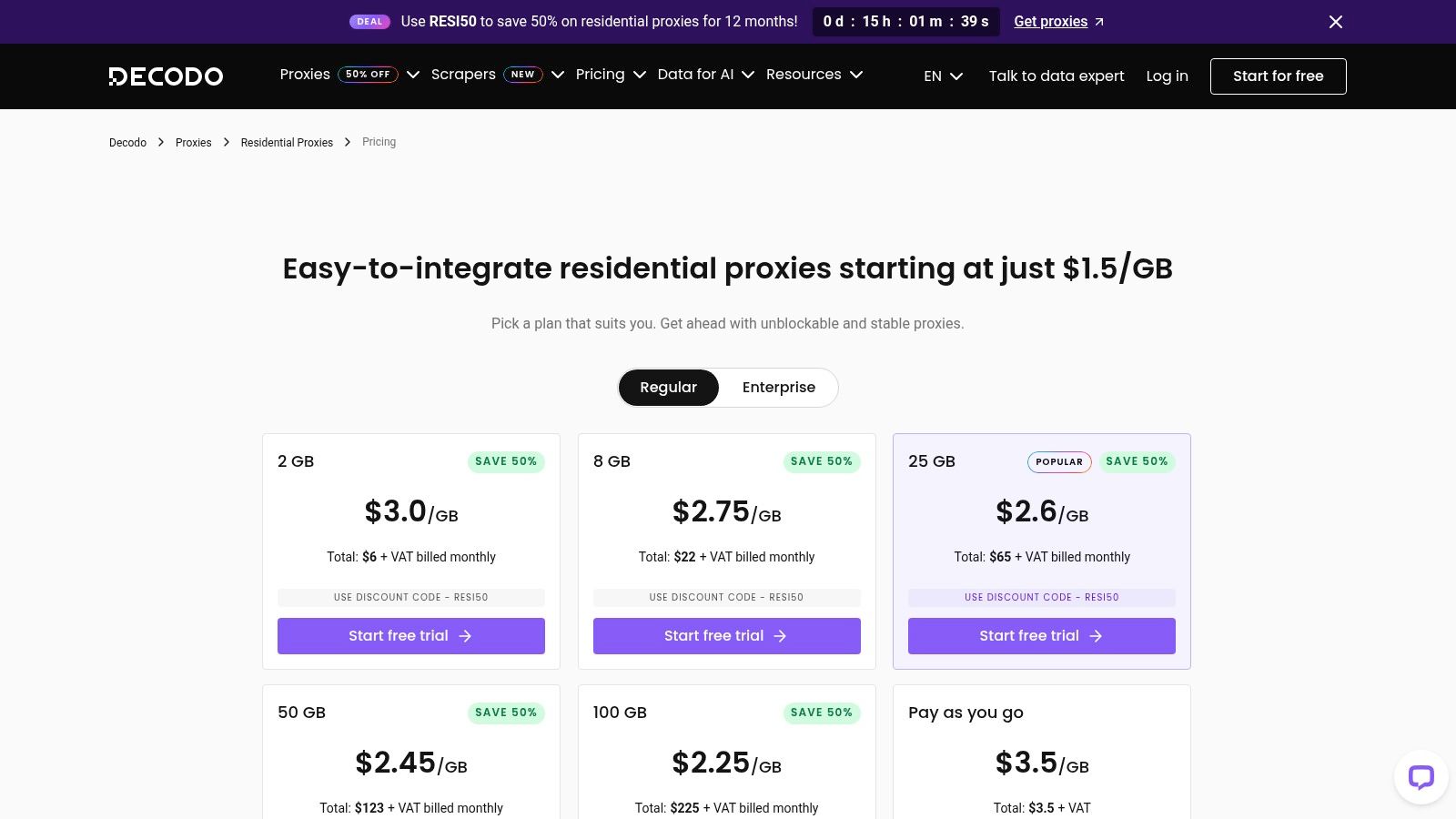

4. Decodo (formerly Smartproxy)

Decodo, which recently rebranded from the well-known Smartproxy, carves out a niche by offering robust proxy solutions tailored for small to medium-sized businesses (SMBs) and individual developers. It strikes an excellent balance between performance, usability, and cost-effectiveness, making advanced proxy features accessible without the enterprise-level price tag. The platform is celebrated for its straightforward user dashboard and quick onboarding, allowing users to get scraping projects up and running in minutes.

With a substantial pool of over 115 million residential IPs, Decodo provides both rotating and sticky session options, giving developers flexibility for various scraping tasks. For example, its sticky sessions are ideal for managing social media accounts or navigating multi-step checkout processes. For those targeting social platforms, understanding how to configure these sessions is key; you can explore this further in our guide to using residential proxies for Instagram. The platform’s focus on a smooth user experience, backed by 24/7 support, makes it a strong contender for teams that prioritize ease of use.

Key Features and Considerations

Decodo is a powerful option, but it's important to understand its specific strengths and the implications of its recent brand change.

User-Centric Design: The interface is exceptionally clean and intuitive, significantly lowering the barrier to entry for those new to proxy management.

Flexible Sessions: Offers both rotating IPs for broad data collection and sticky IPs (up to 30 minutes) for tasks requiring session persistence.

Aggressive Pricing: Often runs promotions and offers competitive pay-as-you-go and subscription plans, appealing to budget-conscious users.

Brand Transition: The shift from Smartproxy to Decodo may cause some initial confusion for returning users, though the core service itself is unchanged.
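The difference between rotating and sticky sessions usually comes down to how you address the proxy gateway. The sketch below builds a proxies mapping in the format accepted by the Python `requests` library. The gateway host, port, credentials, and the `-session-<id>` username suffix are all hypothetical placeholders; each provider documents its own syntax, so treat this as a pattern rather than Decodo's actual configuration.

```python
import uuid
from typing import Optional

# Hypothetical gateway and credentials; real values and the username
# syntax for sticky sessions differ from provider to provider.
PROXY_HOST = "gate.example-proxy.com"
PROXY_PORT = 7000
USERNAME = "user"
PASSWORD = "pass"


def build_proxies(session_id: Optional[str] = None) -> dict:
    """Return a requests-style proxies mapping.

    Without a session_id, the gateway is assumed to rotate the exit IP on
    every request. With one, it is assumed to keep the same exit IP for
    the lifetime of that session.
    """
    user = USERNAME if session_id is None else f"{USERNAME}-session-{session_id}"
    url = f"http://{user}:{PASSWORD}@{PROXY_HOST}:{PROXY_PORT}"
    return {"http": url, "https": url}


rotating = build_proxies()                                # new IP per request
sticky = build_proxies(session_id=uuid.uuid4().hex[:8])   # same IP per session
```

Either mapping can then be passed as `proxies=...` to `requests.get`, or applied to a `requests.Session` with `session.proxies.update(sticky)` so that a multi-step flow reuses the same exit IP.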

| Feature | Details |
|---|---|
| Proxy Pool Size | 115M+ Residential IPs |
| Geo-Targeting | Country, City, ZIP, ASN |
| Primary Use Cases | E-commerce scraping, ad verification, social media |
| Best For | SMBs, individual developers, freelancers |
| Starting Price | $7/GB (Residential PAYG) |
| Unique Tooling | User-friendly dashboard, Chrome/Firefox extensions |

Overall, Decodo offers some of the best proxies for web scraping for users who need a powerful, reliable, and easy-to-use solution without a complex setup. Its competitive pricing and strong feature set make it an excellent choice for growing businesses and developers.

5. Zyte (Smart Proxy Manager / Zyte API)

Zyte shifts the proxy paradigm from managing infrastructure to focusing on successful data extraction. It offers an API-first managed unblocking and proxy automation layer, ideal for developers who want to offload the complexities of anti-bot bypass and IP rotation. Instead of billing per gigabyte of traffic, Zyte's model charges per successful request, aligning costs directly with results and providing predictable scraping economics.

This approach is particularly powerful for tackling heavily protected websites where a DIY proxy setup would require constant trial and error. By handling everything from proxy selection and rotation to fingerprinting and CAPTCHA solving, Zyte allows teams to focus on parsing the data rather than accessing it. The optional browser rendering feature is crucial for JavaScript-heavy pages, ensuring complete data capture from dynamic sites that are notoriously difficult to scrape.

Key Features and Considerations

Zyte’s managed service model offers significant benefits but trades direct control for automated success.

Success-Based Pricing: You only pay for successful requests, which eliminates wasted budget on failed connections and blocked IPs. This is a game-changer for projects with tight margins.

Automated Unblocking: The service automatically handles ban detection, retries, and proxy rotation, abstracting away the most time-consuming parts of web scraping.

API-First Model: As a fully managed API, it abstracts the underlying proxy infrastructure. This means less granular control over specific IPs compared to traditional providers.

Cost Efficiency: While potentially more expensive for simple, unprotected websites, it often proves more cost-effective for complex targets by minimizing development time and failed request costs.
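A quick calculation makes the per-request versus per-GB trade-off concrete. The sketch below compares the cost of 1,000 successful pages under each model. The $25 for 50k requests figure is the Zyte starting price listed in this article, and $10/GB is the residential PAYG rate listed for Bright Data; the 0.5 MB average page size, the 80% success rate, and the assumption that failed attempts consume the same bandwidth as successful ones are purely illustrative.

```python
def cost_per_1k_per_request(plan_price: float, included_requests: int) -> float:
    """Cost of 1,000 successful requests when only successes are billed."""
    return plan_price * 1000 / included_requests


def cost_per_1k_per_gb(price_per_gb: float, avg_page_mb: float, success_rate: float) -> float:
    """Cost of 1,000 successful requests on a per-GB plan.

    Assumes failed attempts use as much bandwidth as successful ones
    and that 1 GB = 1,000 MB.
    """
    attempts_needed = 1000 / success_rate
    return attempts_needed * avg_page_mb / 1000 * price_per_gb


per_request = cost_per_1k_per_request(25.0, 50_000)
per_gb = cost_per_1k_per_gb(10.0, avg_page_mb=0.5, success_rate=0.8)
print(f"per-request plan: ${per_request:.2f} per 1,000 successes")  # $0.50
print(f"per-GB plan:      ${per_gb:.2f} per 1,000 successes")       # $6.25
```

The result is sensitive to the inputs: with small 0.05 MB pages and a 100% success rate, the per-GB figure drops to about $0.50, which is why simple, unprotected targets tend to favor traditional proxies.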

| Feature | Details |
|---|---|
| Proxy Pool Size | Managed and automated (not publicly specified) |
| Geo-Targeting | Available through API parameters |
| Primary Use Cases | E-commerce, real estate data, news aggregation |
| Best For | Teams wanting a hands-off, results-driven solution |
| Starting Price | $25/month for 50k successful API requests |
| Unique Tooling | Integrated browser rendering, automated ban handling |

Ultimately, Zyte is one of the best proxies for web scraping when the primary goal is reliable data extraction from difficult targets with predictable costs. It is less suited for users who require direct, low-level control over their proxy infrastructure.

Website: https://www.zyte.com/pricing/

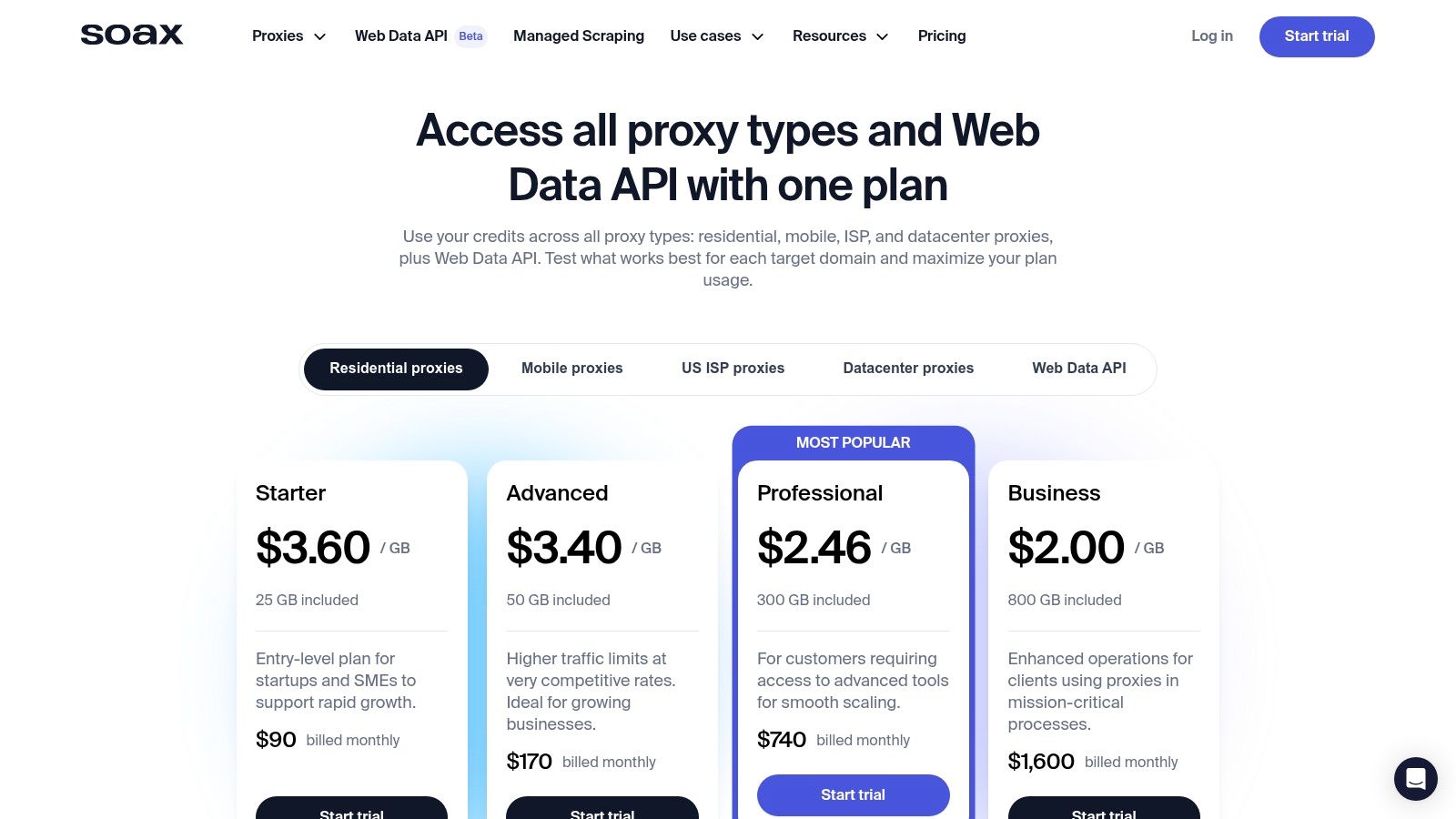

6. SOAX

SOAX enters the market with a compelling, flexible model designed for startups and growing teams that need access to various proxy types without committing to separate plans. Its unified credit system allows users to seamlessly switch between residential, mobile, ISP, and datacenter proxies, offering a versatile solution for dynamic web scraping needs. This flexibility is particularly valuable for projects that require testing different IP types to find the most effective and cost-efficient approach for a specific target.

The platform boasts an impressive pool of over 155 million residential IPs and extensive geo-targeting capabilities across more than 195 countries. This makes it a strong contender for tasks like localized e-commerce price tracking and ad verification. SOAX also provides a low-cost trial for just $1.99, giving developers a chance to validate its performance and targeting precision before making a larger investment, which is a significant advantage for those carefully managing their budget.

Key Features and Considerations

SOAX’s main appeal lies in its pricing model and broad IP coverage, but it's important to weigh the trade-offs.

Unified Credit System: A single subscription provides credits that can be used across all proxy types, simplifying budget management and resource allocation.

Broad Protocol Support: Supports HTTP(S), SOCKS5, UDP, and QUIC, which covers most scraping clients and tools.

Paid Trial: The $1.99 trial provides a low-risk entry point but is limited to 400MB of traffic, which may be insufficient for comprehensive testing.

Session Stability: While sticky sessions are available, some users report that IPs in certain regions or on specific carriers may rotate more frequently than desired for long-duration tasks.

| Feature | Details |
|---|---|
| Proxy Pool Size | 155M+ Residential, 33M+ Mobile IPs |
| Geo-Targeting | Country, Region, City, ISP |
| Primary Use Cases | Ad verification, market research, price monitoring |
| Best For | Startups, SMBs, developers needing flexibility |
| Starting Price | $99/month (Residential); $1.99 for a 3-day trial |
| Unique Tooling | Unified dashboard for managing different proxy types |

Overall, SOAX is one of the best proxies for web scraping for users who prioritize flexibility and cost-effectiveness. Its unified credit model is a standout feature, making it an excellent choice for teams experimenting with different strategies or scaling their data collection operations.

Website: soax.com

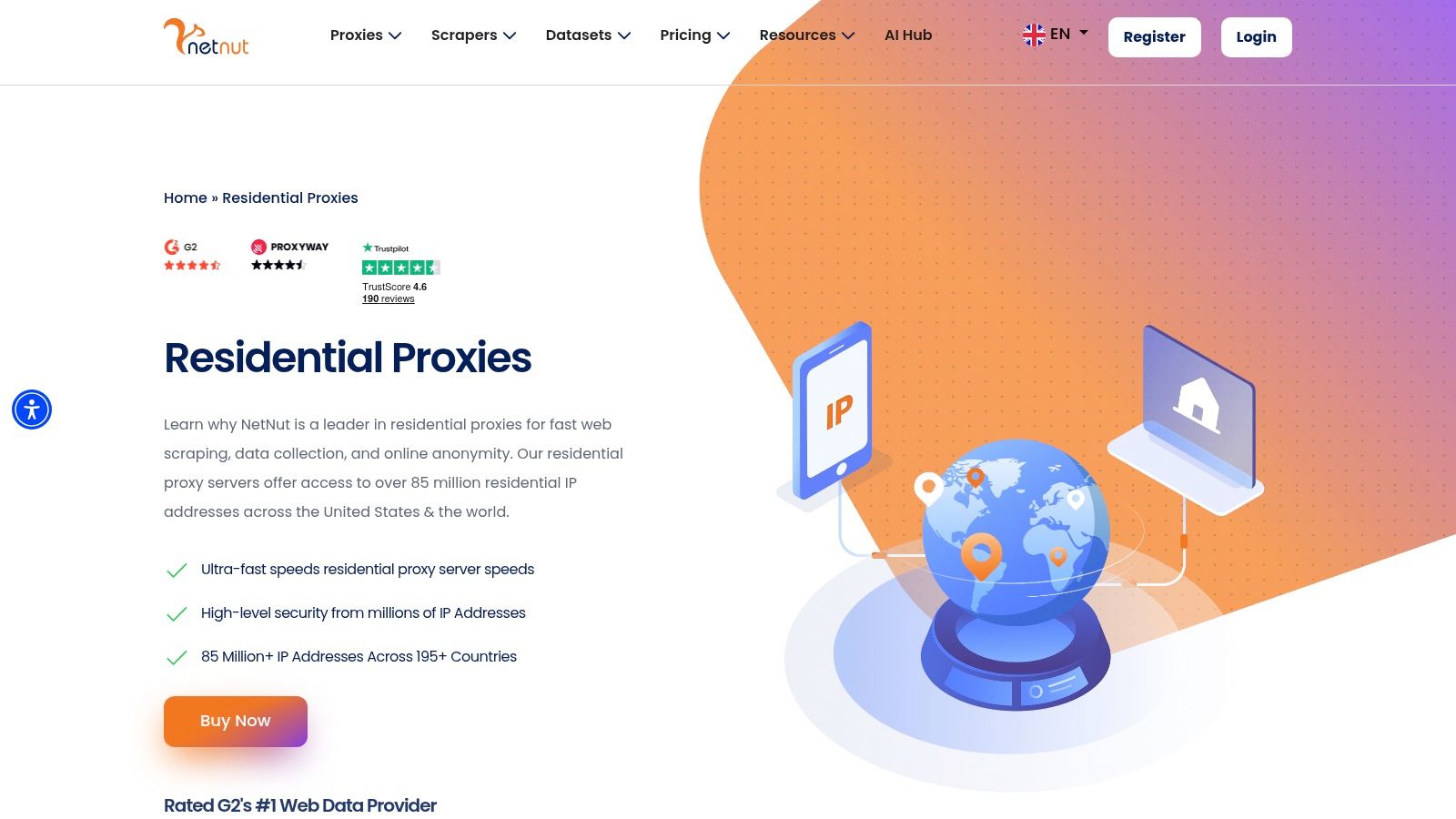

7. NetNut

NetNut is a premium proxy provider focused on delivering high-speed and stable residential IPs directly from ISPs, making it a strong choice for enterprise-level web scraping where performance is critical. It emphasizes speed and uptime, positioning its network as a reliable infrastructure for production workloads that cannot tolerate interruptions. The architecture is designed to minimize hops, leading to faster response times for data extraction tasks.

With a pool of over 85 million residential IPs, NetNut offers excellent global coverage. A key advantage for developers running multiple concurrent scrapers is the unlimited connections policy, which simplifies scaling without hitting account-level restrictions. This makes it particularly suitable for high-throughput projects like real-time price monitoring or large-scale market research where parallel requests are essential for efficiency.

Key Features and Considerations

NetNut's focus on performance and enterprise needs comes with specific trade-offs.

Direct ISP Connectivity: The network architecture is optimized for speed by sourcing IPs directly from ISPs, which can provide a performance edge over traditional P2P networks.

Scalable Tiers: The pricing is structured in clear, scalable tiers based on data usage, which suits businesses with predictable monthly requirements.

Concurrency: Unlimited concurrent connections and threads are a significant benefit for parallel scraping operations, removing a common bottleneck.

Sales-Gated Pricing: While some plans are listed, accessing details for higher-volume tiers or customized solutions often requires contacting the sales team, which can slow down the initial evaluation process.
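When a provider does not cap concurrent connections, the practical limit becomes your own scraper. A minimal sketch of parallel fetching with Python's standard-library thread pool is shown below. The `fake_fetch` function is a stand-in for a real proxied HTTP call, and the worker count is an arbitrary example value, not a NetNut recommendation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def fetch_all(urls: Iterable[str], fetch: Callable[[str], str], max_workers: int = 32) -> List[str]:
    """Run `fetch` over `urls` in parallel threads, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


def fake_fetch(url: str) -> str:
    # Stand-in for a real request routed through a proxy.
    return f"fetched {url}"


urls = [f"https://example.com/p/{i}" for i in range(5)]
results = fetch_all(urls, fake_fetch, max_workers=5)
print(results[0])  # prints: fetched https://example.com/p/0
```

In practice, the target site's rate limits usually matter more than the proxy plan's, so it is worth raising `max_workers` gradually while watching the error rate.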

| Feature | Details |
|---|---|
| Proxy Pool Size | 85M+ Residential IPs |
| Geo-Targeting | Country, State, City |
| Primary Use Cases | Real-time data collection, ad verification, brand protection |
| Best For | Enterprises, high-volume scraping teams |
| Starting Price | $300/20GB (Residential) |
| Unique Tooling | Dedicated account managers, robust API access |

Overall, NetNut is one of the best proxies for web scraping for users who prioritize speed and reliability for consistent, high-volume tasks. Its straightforward, scalable plans and unlimited concurrency are ideal for production environments, though it may lack the pay-as-you-go flexibility that smaller projects require.

Website: netnut.io/residential-proxies/

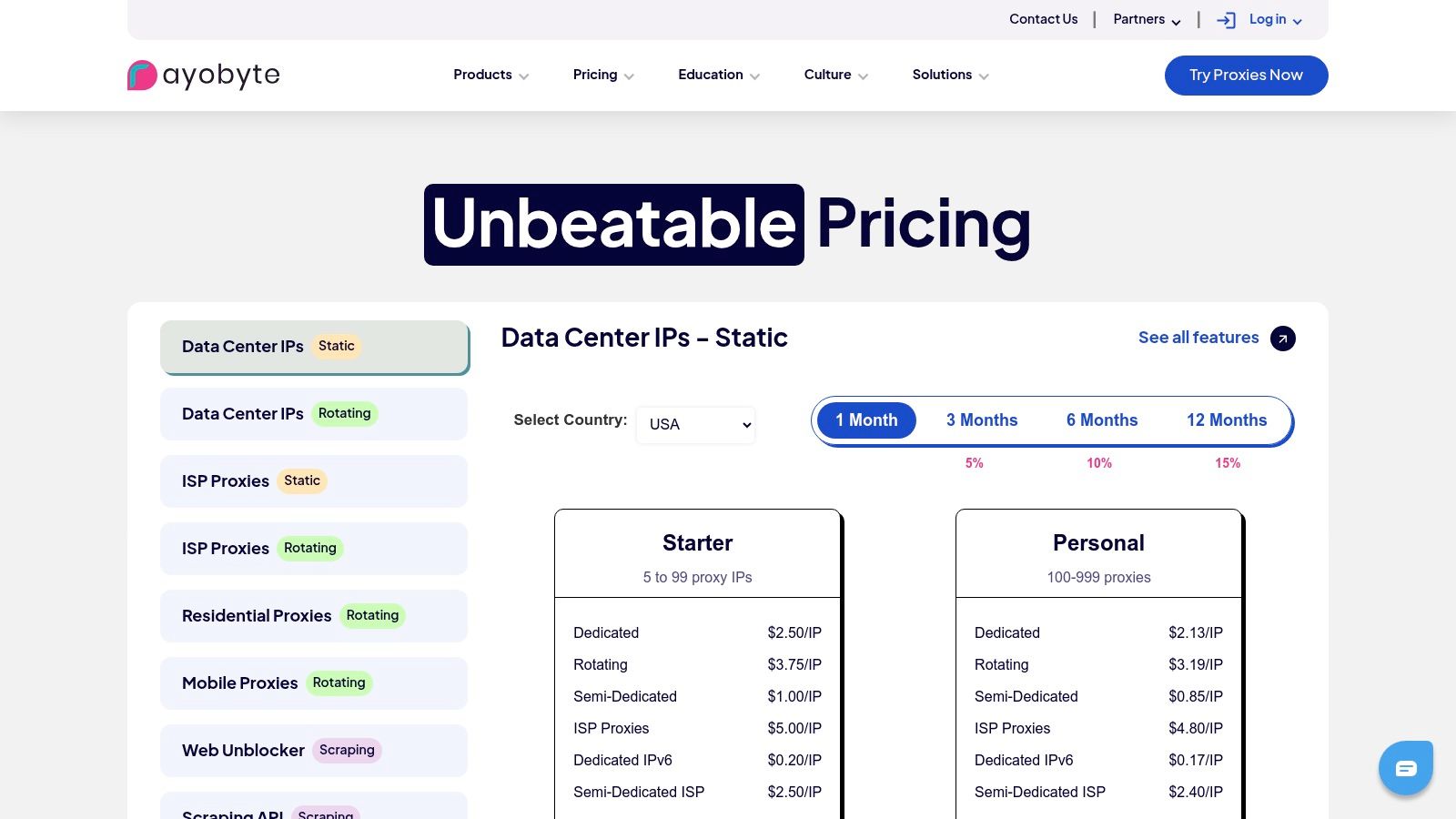

8. Rayobyte

Rayobyte, a US-based provider, carves out a niche by focusing on ethical sourcing, transparent pricing, and a strong American IP footprint. It offers a well-rounded suite of residential, ISP, and datacenter proxies, catering to users who value clear costs and reliable performance, particularly for US-centric data extraction tasks. The company’s commitment to ethically sourced residential IPs provides a layer of trust for businesses concerned with compliance and network integrity.

This provider stands out with its flexible and often user-friendly pricing models, including options for never-expiring residential bandwidth on certain plans. This is a significant advantage for developers with intermittent scraping needs, as they don't lose unused data at the end of a billing cycle. Rayobyte’s free trial for residential proxies also makes it an accessible option for those wanting to test its capabilities before committing to a larger plan.

Key Features and Considerations

Rayobyte's offerings are practical and straightforward, but it's good to know where they shine and what limitations exist.

Ethical Sourcing: A strong emphasis on ethically acquired residential IPs, which is a key differentiator for compliance-conscious organizations.

Pricing Transparency: Clear, upfront pricing across its product lines helps businesses forecast costs without hidden fees. Reseller programs also offer opportunities for agencies.

Flexible Bandwidth: Unique offers like never-expiring bandwidth provide excellent value for projects with fluctuating or unpredictable data requirements.

Feature Access: While the core product is robust, some advanced features or premium support may be tied to higher-tier custom or reseller plans.

| Feature | Details |
|---|---|
| Proxy Types | Residential, ISP, Datacenter (Rotating & Static) |
| Geo-Targeting | Country, City, ASN |
| Primary Use Cases | E-commerce monitoring, ad verification, social media |
| Best For | SMBs, developers, resellers needing US-focused IPs |
| Starting Price | $4/GB (Residential) |
| Unique Selling Point | Ethical sourcing, never-expiring bandwidth options |

Ultimately, Rayobyte is one of the best proxies for web scraping for users who prioritize ethical practices, straightforward pricing, and a solid US presence. Its flexible plans are a great fit for small to medium-sized projects, though large-scale enterprise users might find more advanced tooling elsewhere.

Website: rayobyte.com/pricing/

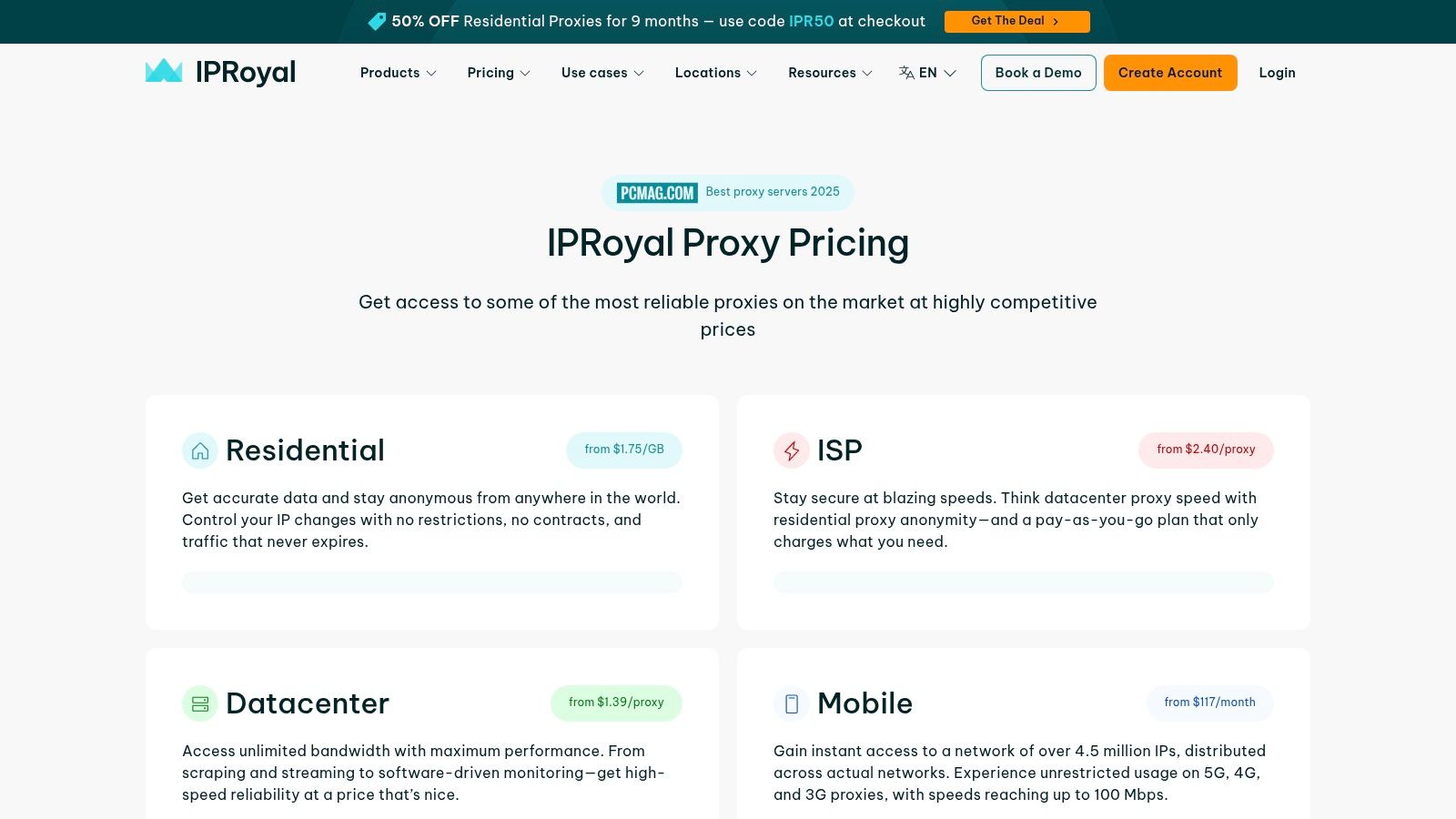

9. IPRoyal

IPRoyal carves out a niche in the market by focusing on affordability and flexibility, making it an attractive option for developers, small businesses, and individuals who are cost-conscious. It offers a solid range of proxy types, including residential, ISP, datacenter, and mobile, but its key differentiator is the "never-expiring" residential traffic model. This unique pricing structure allows users to purchase a data package that remains valid until it's fully used, removing the pressure of monthly subscription deadlines.

With a pool of over 32 million ethically sourced residential IPs, IPRoyal provides reliable geo-targeting capabilities across numerous countries. The platform supports both rotating and sticky sessions, giving scrapers control over their connection persistence. While it may not have the vast scale of enterprise providers, its straightforward dashboard and unlimited concurrent connections make it one of the best proxies for web scraping for projects like SERP analysis, social media data gathering, and e-commerce price tracking where budget is a primary consideration.

Key Features and Considerations

IPRoyal’s value proposition is centered on competitive pricing and user-friendly terms.

Never-Expiring Traffic: The option to buy residential proxy data that doesn't expire is perfect for projects with intermittent or unpredictable usage.

Pricing Strategy: The provider is known for frequent discounts and promotional offers, often making it one of the most budget-friendly choices on the market.

Performance: While effective for many common targets, complex websites with advanced anti-bot systems may require more sophisticated retry logic on the user's end.

Proxy Diversity: Access to residential, static residential (ISP), datacenter, and mobile proxies covers a wide range of scraping needs from a single provider.
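The retry logic mentioned above can be as simple as exponential backoff with jitter. The sketch below is one possible shape for it: `fetch` is any callable that performs a request and returns an HTTP status code, and the set of status codes treated as "blocked" is an assumption that you would tune per target. The `sleep` parameter is injectable so the logic can be exercised without real delays.

```python
import random
import time
from typing import Callable

# Assumed set of responses worth retrying; adjust for your target site.
BLOCK_STATUSES = {403, 429, 503}


def fetch_with_retries(
    fetch: Callable[[], int],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `fetch` until it returns a non-blocked status or attempts run out.

    Returns the last status code seen.
    """
    status = -1
    for attempt in range(max_attempts):
        status = fetch()
        if status not in BLOCK_STATUSES:
            return status
        if attempt < max_attempts - 1:
            # Backoff doubles each time (1s, 2s, 4s, ...) plus up to 0.5s of jitter.
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.5))
    return status


# Simulated target: blocked twice, then succeeds. Delays are recorded, not slept.
statuses = iter([429, 503, 200])
delays = []
final = fetch_with_retries(lambda: next(statuses), sleep=delays.append)
print(final, len(delays))  # prints: 200 2
```

With a rotating residential pool, each retry typically leaves from a different IP, so a short backoff is often enough; sticky sessions may need a fresh session ID before retrying.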

| Feature | Details |
|---|---|
| Proxy Pool Size | 32M+ Residential IPs |
| Geo-Targeting | Country, State, City |
| Primary Use Cases | Price monitoring, ad verification, social media |
| Best For | Small businesses, individual developers, budget projects |
| Starting Price | $1.75/GB (Residential, larger plans) |
| Unique Tooling | Chrome/Firefox extension, proxy tester |

In summary, IPRoyal is an excellent starting point for those entering the world of web scraping or for anyone needing a reliable, no-frills proxy service without a hefty price tag. Its straightforward approach and flexible pricing make it a strong contender for small to medium-scale operations.

Website: iproyal.com/pricing/

10. Webshare

Webshare offers a compelling entry point into the world of proxies, focusing on affordability and accessibility. As a low-cost datacenter proxy provider, it’s an excellent starting point for individual developers, students, or small projects testing the waters of web scraping. The platform strips away the complexity of enterprise-level solutions, offering a straightforward, self-service model that allows users to get started in minutes.

The standout feature is its generous "forever free" plan, which provides 10 shared proxies and a monthly bandwidth allowance. This is not a time-limited trial, making it a genuinely useful resource for low-volume tasks or for developers learning how to integrate proxies into their scrapers. This accessibility, combined with instant provisioning and simple billing, makes it a top choice for projects where budget is the primary constraint and performance on high-security websites is a secondary concern.

Key Features and Considerations

Webshare’s value proposition is its simplicity and low cost, but it's important to know the limitations.

Cost-Effectiveness: The free tier and extremely low-priced paid plans make it one of the most budget-friendly options available.

Instant Access: The sign-up and proxy provisioning process is fully automated and immediate, with no lengthy verification required.

IP Quality: As datacenter proxies, especially shared ones, they are more susceptible to blocks from sophisticated targets compared to residential or mobile IPs.

Use Case Suitability: Ideal for scraping less protected websites, API testing, or as a learning tool for proxy integration.

| Feature | Details |
|---|---|
| Proxy Pool Size | 100K+ Datacenter IPs |
| Geo-Targeting | Country-level (Limited compared to premium services) |
| Primary Use Cases | Small-scale projects, testing, educational purposes |
| Best For | Students, hobbyists, developers on a tight budget |
| Starting Price | Free (10 proxies, 1GB/month) |
| Unique Tooling | Simple dashboard for proxy list management |

Overall, Webshare is one of the best proxies for web scraping for those who prioritize low cost and ease of use over raw power and unblockability. It's an invaluable tool for getting started but may require an upgrade to a more robust provider for challenging, large-scale data extraction tasks.

Website: https://www.webshare.io/pricing

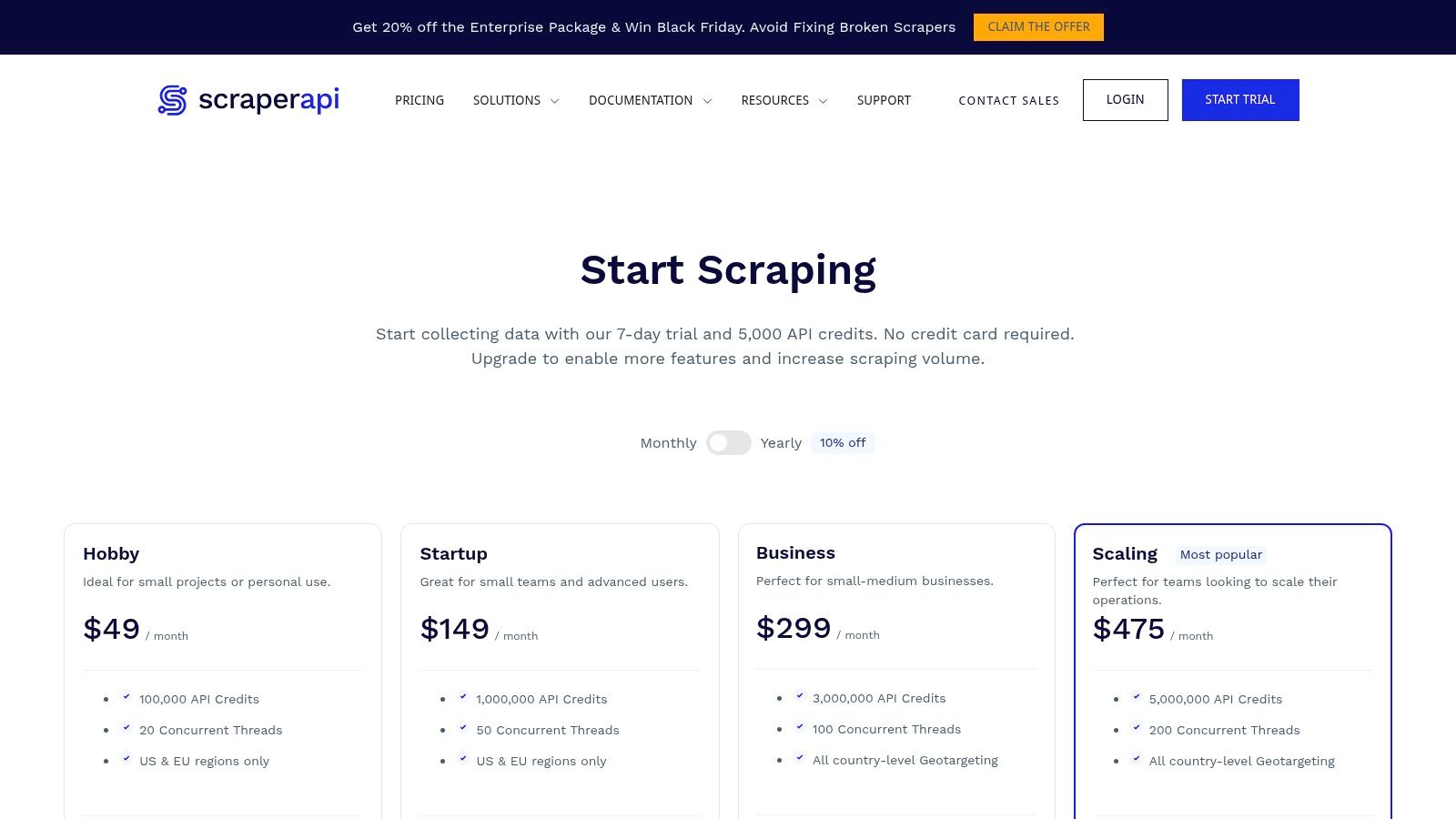

11. ScraperAPI

ScraperAPI takes a different approach by bundling proxy management into a simple API call. Instead of providing raw proxy lists, it acts as a full-service scraping solution, handling proxy rotation, CAPTCHAs, and JavaScript rendering automatically. This makes it an ideal choice for developers who want to focus on data extraction rather than the complexities of building and maintaining proxy infrastructure. It abstracts away the need to manage IP pools and retry logic.

The platform is built for ease of use. You send your target URL to the API endpoint, and ScraperAPI returns the clean HTML from a successful request. It utilizes a mix of residential, mobile, and datacenter IPs to achieve high success rates. This integrated system is particularly effective for standard scraping tasks like e-commerce price gathering or SERP data collection, where the underlying proxy mechanics are less important than the final result. For a guide on how to integrate such services, see our tutorial on using proxies with Python Requests.
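The "send a URL, get HTML back" flow typically means encoding the target URL as a query parameter on the provider's endpoint. The sketch below only builds that request URL. The endpoint and the `api_key`, `url`, and `render` parameter names reflect our understanding of ScraperAPI's interface and should be treated as assumptions to verify against the current documentation; the API key is a placeholder.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm against the provider's documentation.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key: str, target_url: str, render_js: bool = False) -> str:
    """Build the API request URL that wraps `target_url`.

    The target URL is percent-encoded so its own query string survives intact.
    """
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render"] = "true"  # assumed flag for JavaScript rendering
    return f"{API_ENDPOINT}?{urlencode(params)}"


print(build_request_url("YOUR_API_KEY", "https://example.com/products?page=2"))
# prints: https://api.scraperapi.com/?api_key=YOUR_API_KEY&url=https%3A%2F%2Fexample.com%2Fproducts%3Fpage%3D2
```

The resulting URL can be fetched with any HTTP client. Managed APIs can take noticeably longer than a direct request because they retry internally, so a generous client timeout is a sensible default.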

Key Features and Considerations

ScraperAPI’s value proposition is its simplicity and predictable pricing model.

Managed Infrastructure: The service automatically handles all aspects of proxy rotation, user-agent management, and browser rendering, significantly reducing development overhead.

Pricing Model: Costs are based on API credits, which are consumed only by successful requests, so expenses track your actual usage. A 7-day trial with 5,000 free credits allows for thorough testing.

Loss of Control: The trade-off for convenience is a lack of direct control. You cannot select specific IPs or manage session persistence manually, which may be a limitation for advanced use cases.

Geo-Targeting Limits: While country-level targeting is available, access is restricted on lower-tier plans, which are primarily focused on the US and EU.

| Feature | Details |
|---|---|
| Proxy Pool Size | 40M+ IPs (Residential, Datacenter, Mobile) |
| Geo-Targeting | Country-level (limited on basic plans) |
| Primary Use Cases | E-commerce, SERP data, general web scraping |
| Best For | Developers seeking a hands-off proxy solution |
| Starting Price | $49/month for 100,000 API credits |
| Unique Tooling | Simple API endpoint, analytics dashboard |

Ultimately, ScraperAPI is one of the best proxies for web scraping when your goal is to outsource infrastructure management. It’s perfect for teams that value speed of development and predictable costs over granular proxy control.

Website: scraperapi.com

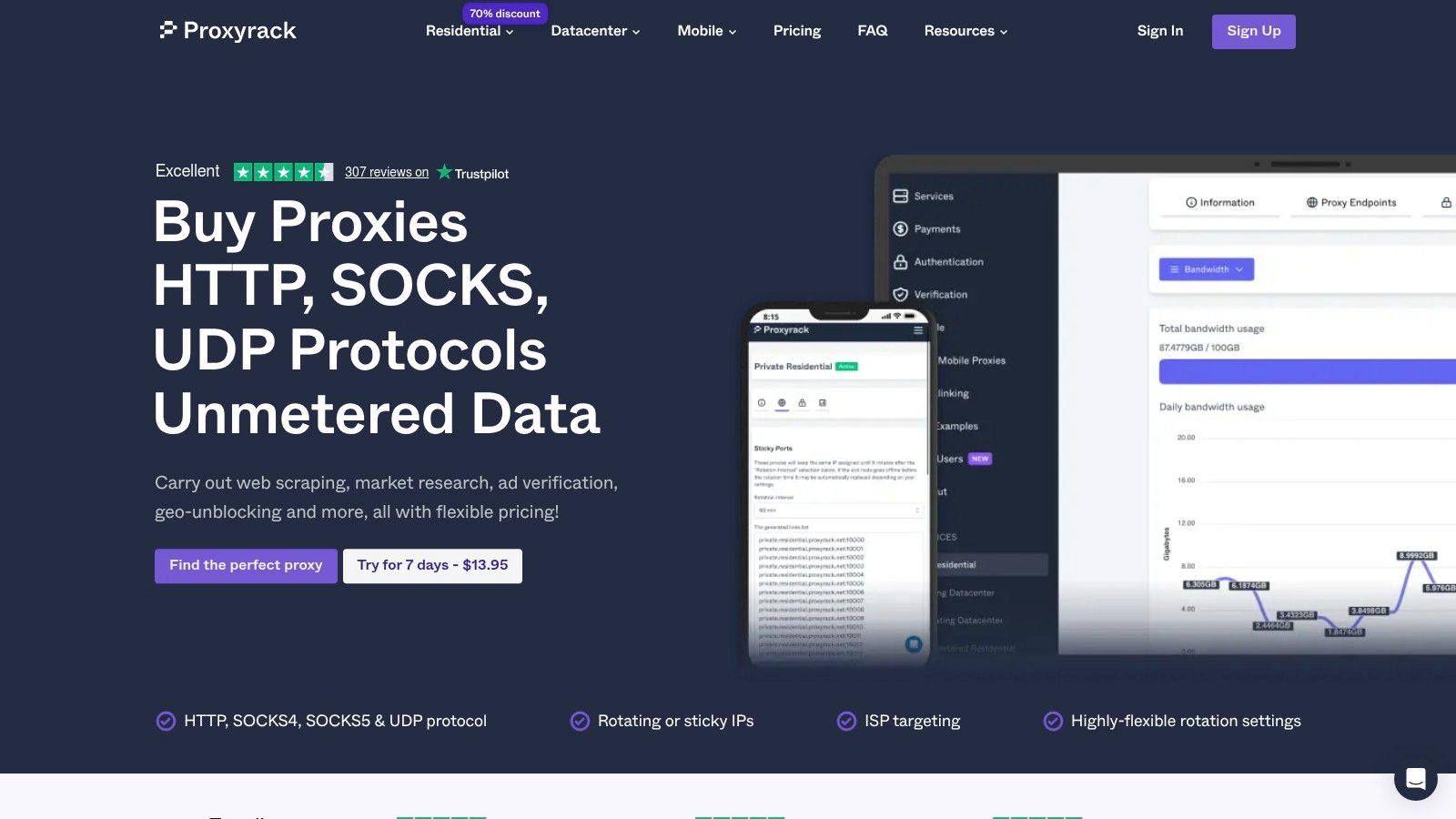

12. Proxyrack

Proxyrack stands out in the proxy market by offering a unique pricing model that caters to high-volume, consistent scraping operations. While it provides standard metered plans, its unmetered residential proxy plans are the main attraction. This approach, priced by the number of concurrent threads rather than data usage, is a game-changer for projects where gigabyte-based billing is unpredictable and costly.

This provider offers a versatile portfolio, including residential, datacenter, and ISP proxies, with support for HTTP, SOCKS, and UDP protocols. The flexibility makes it suitable for various scraping tasks, from simple data collection to more complex P2P activities. The dashboard provides live reporting, which is a practical tool for monitoring usage and troubleshooting connections in real-time, helping users optimize their thread allocation.

Key Features and Considerations

Proxyrack’s thread-based model is powerful but requires a good understanding of your project’s concurrency needs.

Unmetered Residential Proxies: The primary differentiator, allowing unlimited data usage on plans priced by concurrent connections. This is ideal for scraping large, media-light websites where bandwidth is less of a concern than the number of parallel requests.

Protocol Support: Broad support including HTTP(S), SOCKS5, and UDP accommodates a wide range of applications beyond standard web scraping.

Pricing Model: The thread-based pricing requires careful planning. Users must accurately estimate the number of parallel connections they need to avoid overpaying for unused threads or under-provisioning their scrapers.

Compliance: Lacks some of the advanced enterprise-level compliance and governance features found in top-tier providers, making it better suited for small-to-mid-sized teams.
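Sizing a thread-based plan comes down to estimating how many requests are in flight at once. The sketch below is one rough way to do that, using Little's law (concurrency = request rate × average time per request). The function name, the retry adjustment, and the 20% headroom default are illustrative assumptions, not anything Proxyrack publishes.

```python
import math


def threads_needed(pages_per_hour: float,
                   avg_seconds_per_request: float,
                   retry_rate: float = 0.0,
                   headroom: float = 0.2) -> int:
    """Estimate concurrent connections for a target throughput.

    Hypothetical helper: applies Little's law, inflates the request
    rate to cover retries, then adds a safety margin.
    """
    if not 0 <= retry_rate < 1:
        raise ValueError("retry_rate must be in [0, 1)")
    if pages_per_hour < 0 or avg_seconds_per_request < 0 or headroom < 0:
        raise ValueError("inputs must be non-negative")

    # Each successful page costs 1 / (1 - retry_rate) requests on average.
    requests_per_second = pages_per_hour / 3600 / (1 - retry_rate)
    # Little's law: requests in flight = arrival rate * time in system.
    in_flight = requests_per_second * avg_seconds_per_request
    return math.ceil(in_flight * (1 + headroom))


if __name__ == "__main__":
    # Illustrative numbers only: 180,000 pages/hour at 2 s per request.
    print(threads_needed(180_000, 2.0, retry_rate=0.1))
```

Measure the average request time against your real targets before trusting the result, since slow or heavily protected sites push the required thread count up quickly.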

| Feature | Details |
|---|---|
| Proxy Pool Size | 10M+ Residential IPs |
| Geo-Targeting | Country, City, ISP |
| Primary Use Cases | High-volume scraping, market research, brand protection |
| Best For | Teams needing predictable costs for heavy scraping |
| Starting Price | $49/month (Unmetered Residential - 100 Threads) |
| Unique Tooling | Live connection reporting, multiple protocol support |

Ultimately, Proxyrack is among the best proxies for web scraping for users who prioritize predictable costs and high-volume data extraction. Its unmetered plans offer exceptional value if your scraping workload is consistent and can be scaled via concurrency.

Website: https://www.proxyrack.com/

Top 12 Web Scraping Proxies Comparison

| Service | Core features (✨) | Quality (★) | Pricing & value (💰) | Best for (👥) | Why pick (🏆) |
|---|---|---|---|---|---|
| ScrapeUnblocker 🏆 | ✨ Real-browser JS rendering, rotating residential proxies, raw HTML/JSON, city-level geotargeting, unlimited concurrency | ★★★★★ accuracy-first + hands-on QA/support | 💰 Simple per-request billing; predictable plans & custom limits (contact sales) | 👥 Devs, data teams, AI/ML, price/SEO/real‑estate monitoring | 🏆 All-in-one rendering+proxy+unblock API; accuracy guarantee |
| Bright Data | ✨ 150M+ IPs, residential/mobile/ISP/datacenter, city/ZIP targeting | ★★★★☆ enterprise-grade, high uptime claims | 💰 PAYG & tiers; enterprise pricing; KYC onboarding | 👥 Enterprises needing global coverage & compliance | Broadest global IP coverage & tooling |
| Oxylabs | ✨ Residential/mobile/ISP/datacenter + Web Unblocker & Scraper APIs | ★★★★☆ reliable, 99.9%+ uptime claims | 💰 Clear self-serve for small plans; enterprise on request | 👥 Enterprises & teams needing ISP/persistent sessions | ISP proxies & persistent-session options |
| Decodo (Smartproxy) | ✨ 115M+ IPs, rotating & sticky sessions, city targeting | ★★★★☆ SMB-friendly, low-latency claims | 💰 Competitive promos; SMB-focused pricing | 👥 SMBs, startups and price-sensitive teams | Easy onboarding & frequent promotions |
| Zyte | ✨ API-first unblocking, per-successful-request pricing, optional browser rendering | ★★★★☆ predictable per-request economics | 💰 Per-successful-request tiers; predictable billing | 👥 Teams needing request-level cost predictability | API-managed anti-bot + automated ban handling |
| SOAX | ✨ 155M+ residential, mobile & ISP IPs; unified credits model | ★★★☆☆ price-competitive, decent coverage | 💰 Unified credits usable across proxy types; paid trial | 👥 Startups/growth teams valuing flexibility | Credits across proxy types; low trial cost |
| NetNut | ✨ 85M+ residential IPs, unlimited concurrent connections, speed-focused | ★★★★☆ high-speed, production-ready | 💰 Volume tiers; scalable enterprise pricing | 👥 Production/enterprise scraping & high-throughput use | Emphasis on speed, uptime & unlimited concurrency |
| Rayobyte | ✨ Residential/ISP/datacenter, transparent pricing, reseller programs | ★★★☆☆ US-focused reliability | 💰 Transparent/granular pricing; trials available | 👥 US-centric teams & resellers | Ethical sourcing messaging & reseller support |
| IPRoyal | ✨ 32M+ residential IPs, never-expiring traffic options, frequent discounts | ★★★☆☆ budget-friendly with promos | 💰 Very competitive per-GB/IP on promotions | 👥 Cost-conscious starters & small teams | 'Never-expiring' traffic bundles & steep discounts |
| Webshare | ✨ Low-cost datacenter proxies, free forever tier (10 proxies ~1GB) | ★★★☆☆ good for testing; shared IPs less reliable | 💰 Free entry-tier + inexpensive paid plans | 👥 Hobbyists, testers, low-volume projects | Free forever tier + instant provisioning |
| ScraperAPI | ✨ Auto rotation, retries, headless rendering + premium IPs | ★★★★☆ reliable turnkey scraping | 💰 Credits-based; predictable URL/request pricing | 👥 Developers who want to avoid proxy infra | Turnkey scraping API with built-in retries & rendering |
| Proxyrack | ✨ Unmetered residential/thread-based plans, datacenter & mobile options | ★★★☆☆ suited for heavy, consistent scraping | 💰 Unmetered thread pricing (no GB limits); sizing needed | 👥 Heavy scrapers needing steady, unmetered throughput | Unmetered/thread-based plans for consistent workloads |

From Proxies to Platforms: Making the Right Call

Navigating the complex landscape of web scraping tools can feel overwhelming, but analyzing a dozen of the top solutions reveals a clear pattern. The journey from raw proxy IP to structured data is filled with choices. Selecting the best proxies for web scraping is not about finding a single "best" provider but about aligning your tools with your project's specific demands, technical expertise, and business objectives.

This guide has taken you through the entire spectrum, from dedicated proxy networks like Bright Data and Oxylabs to budget-friendly options like IPRoyal and Webshare, and finally to all-in-one scraping APIs like ScrapeUnblocker and ScraperAPI. The core takeaway is that the "right" choice hinges on a crucial trade-off: direct infrastructure management versus abstracted data access.

Key Takeaways: From Raw IPs to Successful Requests

If your team possesses deep expertise in web scraping architecture, the granular control offered by a dedicated proxy provider is invaluable. You can fine-tune every aspect of your operation, from IP rotation logic and session management to fingerprinting and header customization.

However, this control comes at a cost, not just in subscription fees but in engineering hours. The time spent debugging failed requests, managing IP bans, solving CAPTCHAs, and reverse-engineering anti-bot systems is a significant operational overhead. For many teams, this time is better spent analyzing the data itself, not wrestling with the infrastructure to get it.

This is where the paradigm shifts from buying proxies to buying successful requests. The key considerations boil down to:

Target Complexity: Is your target a simple HTML site or a dynamic, JavaScript-heavy application protected by Cloudflare, Akamai, or PerimeterX? The more complex the target, the more an integrated platform shines.

Team Resources: Do you have dedicated engineers to manage proxy infrastructure, or do you need a solution that "just works" out of the box? Your team's bandwidth is a critical factor.

Cost Model: Are you optimizing for the lowest cost per gigabyte, or the lowest cost per successful data point? Factoring in development time and failure rates often reveals that a higher-priced API is more cost-effective in the long run.
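The cost-model question above can be made concrete with a small calculation. The sketch below compares options by cost per successful request, folding in engineering time and failure rate. The function name and every figure in the usage example are hypothetical placeholders, not measured results or real vendor prices.

```python
def cost_per_success(monthly_fee: float,
                     engineering_hours: float,
                     hourly_rate: float,
                     requests_sent: int,
                     success_rate: float) -> float:
    """Return the all-in monthly cost divided by successful requests.

    Hypothetical helper: success_rate is the fraction of requests
    (0 to 1) that returned usable data.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    if requests_sent <= 0:
        raise ValueError("requests_sent must be positive")

    total_cost = monthly_fee + engineering_hours * hourly_rate
    successes = requests_sent * success_rate
    return total_cost / successes


if __name__ == "__main__":
    # Made-up inputs for illustration: a cheaper raw proxy plan that needs
    # more upkeep versus a pricier API that needs less.
    raw_proxies = cost_per_success(300, 40, 75, 1_000_000, 0.70)
    scraping_api = cost_per_success(900, 5, 75, 1_000_000, 0.98)
    print(f"raw proxies:  ${raw_proxies:.5f} per successful request")
    print(f"scraping API: ${scraping_api:.5f} per successful request")
```

Swap in your own fees, hours, and measured success rates; the comparison can tip either way depending on how much maintenance a target actually demands.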

Your Final Decision Checklist

Before you commit to a provider, run through this final checklist to ensure you're making a strategic decision, not just a tactical one.

Define Your True Goal: Are you in the business of managing proxies or acquiring data? Be honest about where your team's core competency lies and where you want to focus your efforts.

Calculate the Total Cost of Ownership (TCO): Don't just look at the sticker price. Factor in the engineering hours required for integration, maintenance, and handling blocks. A simple calculation might look like: TCO = subscription cost + (engineering hours × hourly rate).

Evaluate Your Targets: Classify your target websites by difficulty. For the most challenging 20% of your targets, a raw proxy network may yield diminishing returns. This is the prime use case for a specialized scraping API.

Prototype and Benchmark: Never skip the free trial. Test your most difficult target URLs with your top 2-3 contenders. Measure success rates, response times, and the quality of the returned data. This empirical evidence is more valuable than any marketing claim.
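For the benchmarking step, a small harness helps keep trial comparisons consistent. The sketch below assumes you supply a `fetch` callable (a hypothetical wrapper around whichever proxy or API you are testing) that returns a truthy value when the page came back usable. It records success rate and median response time and makes no network calls of its own.

```python
import statistics
import time
from typing import Callable, Iterable


def benchmark(fetch: Callable[[str], bool], urls: Iterable[str]) -> dict:
    """Run fetch over urls and summarize success rate and latency.

    fetch is a caller-supplied function (an assumption of this sketch);
    any exception it raises is counted as a failed request.
    """
    outcomes = []
    for url in urls:
        start = time.perf_counter()
        try:
            ok = bool(fetch(url))
        except Exception:
            ok = False
        outcomes.append((ok, time.perf_counter() - start))

    if not outcomes:
        raise ValueError("no URLs to benchmark")

    return {
        "requests": len(outcomes),
        "success_rate": sum(ok for ok, _ in outcomes) / len(outcomes),
        "median_seconds": statistics.median(sec for _, sec in outcomes),
    }


if __name__ == "__main__":
    # Stand-in fetcher so the example runs offline; replace it with a real
    # request routed through the provider under test.
    def fake_fetch(url: str) -> bool:
        return "blocked" not in url

    sample = ["https://example.com/a", "https://example.com/blocked"]
    print(benchmark(fake_fetch, sample))
```

Run the same URL list through each contender so the numbers are comparable, and check the returned content as well as the status, since a block page can still arrive with a 200 response.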

Ultimately, the evolution of the web scraping industry shows a clear trend toward abstraction and specialization. Just as businesses moved from managing their own servers to using cloud platforms like AWS, data teams are increasingly moving from managing their own proxy pools to using intelligent scraping platforms. This allows them to offload the cat-and-mouse game of anti-bot evasion and focus on their primary mission: transforming raw web data into actionable business intelligence.

Ready to stop managing infrastructure and start getting data? ScrapeUnblocker provides a fully integrated web scraping API that handles everything for you, from premium residential proxies and headless browser rendering to CAPTCHA solving and dynamic fingerprinting. Sign up for a free trial at ScrapeUnblocker and experience the power of paying only for successful requests.
